Top 21 Big Data Tools That Empower Data Wizards

ProjectPro

JUNE 6, 2025

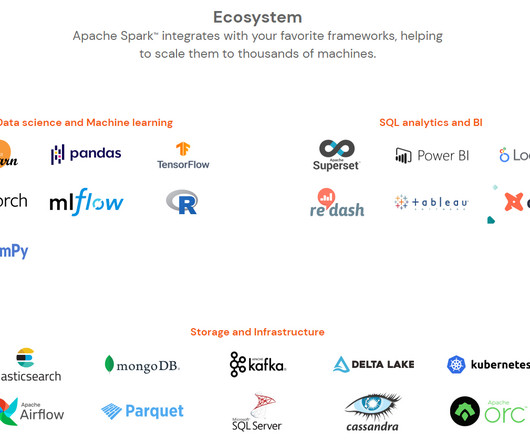

Source Code: Build a Similar Image Finder

Top 3 Open Source Big Data Tools

This section covers three leading open-source big data tools: Apache Spark, Apache Hadoop, and Apache Kafka. In Hadoop clusters, Spark applications can run up to 10 times faster on disk than Hadoop MapReduce. Hadoop, created by Doug Cutting and Michael J.
