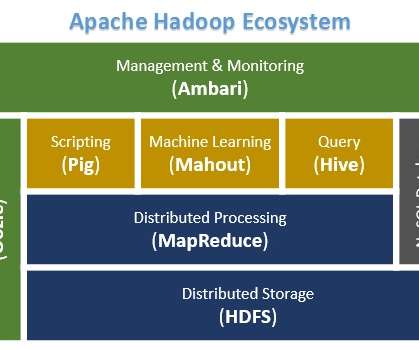

Hadoop Ecosystem Components and Its Architecture

ProjectPro

JUNE 4, 2015

Each component of the Hadoop ecosystem is evident as an explicit entity. A holistic view of the Hadoop architecture gives prominence to four of them: Hadoop Common, Hadoop YARN, the Hadoop Distributed File System (HDFS), and Hadoop MapReduce.
