A Dive into the Basics of Big Data Storage with HDFS

Analytics Vidhya

FEBRUARY 6, 2023

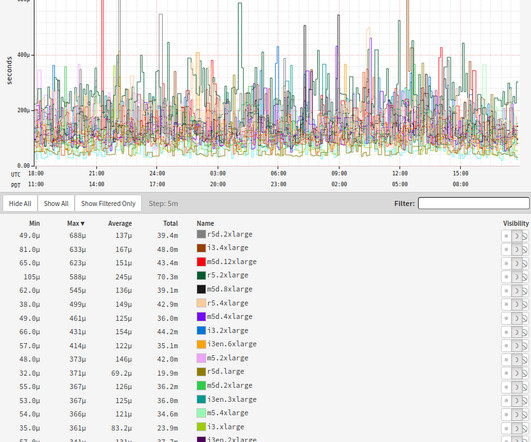

HDFS (the Hadoop Distributed File System) is a core component of the Apache Hadoop ecosystem and allows for storing and processing large datasets across multiple commodity servers. It provides high-throughput access to data and is optimized for […]
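To make "storing large datasets across multiple commodity servers" concrete, here is a minimal sketch of the arithmetic behind splitting a file into blocks and replicating them. It assumes a 128 MiB block size and a replication factor of 3, which are common HDFS defaults but are not stated in the excerpt. The function names and the round-robin placement are hypothetical illustrations, not HDFS's actual API or its rack-aware placement policy.

```python
# Toy model of HDFS-style block splitting and replication.
# Assumptions (not from the excerpt): 128 MiB blocks, replication factor 3.
BLOCK_SIZE_BYTES = 128 * 1024 * 1024
REPLICATION = 3


def block_count(file_size_bytes, block_size=BLOCK_SIZE_BYTES):
    """Number of blocks needed to hold a file (the last block may be partial)."""
    if file_size_bytes <= 0:
        return 0
    # Integer ceiling division avoids float rounding on very large files.
    return (file_size_bytes + block_size - 1) // block_size


def raw_storage_bytes(file_size_bytes, replication=REPLICATION):
    """Total bytes consumed across the cluster once every block is replicated."""
    return file_size_bytes * replication


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Hypothetical round-robin placement: each block lands on distinct nodes.

    Real HDFS uses a rack-aware policy; this only illustrates the spreading.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


if __name__ == "__main__":
    one_gib = 1024 ** 3
    blocks = block_count(one_gib)
    print(blocks, "blocks,", raw_storage_bytes(one_gib), "raw bytes")
    print(place_replicas(blocks, ["node1", "node2", "node3", "node4"]))
```

Under these assumptions, losing any single node leaves at least two copies of every block, which is why inexpensive commodity servers can be used.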
