Is Apache Iceberg the New Hadoop? Navigating the Complexities of Modern Data Lakehouses

Data Engineering Weekly

MARCH 5, 2025

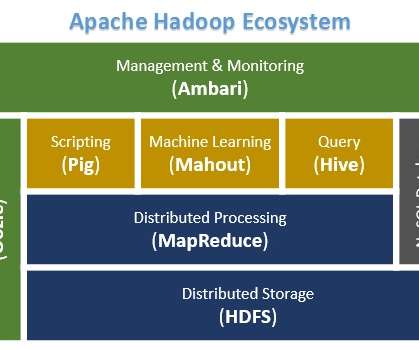

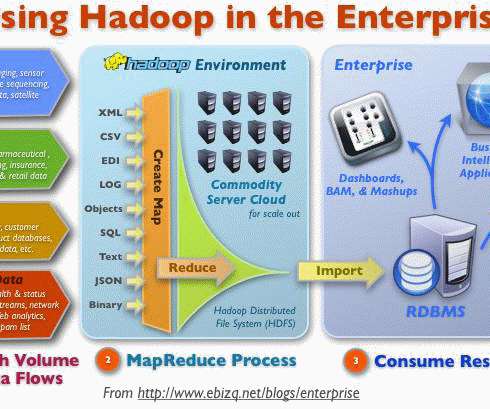

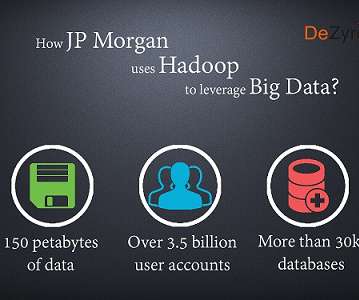

But is Iceberg truly revolutionary, or is it destined to repeat the pitfalls of past solutions like Hadoop? Danny authored a thought-provoking article comparing Iceberg to Hadoop, not on a purely technical level, but in terms of their hype cycles, implementation challenges, and the surrounding ecosystems.
