Top 8 Hadoop Projects to Work in 2024

Knowledge Hut

DECEMBER 28, 2023

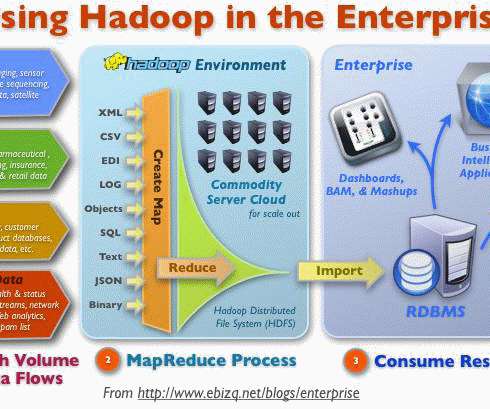

Imagine having a framework capable of handling large amounts of data with reliability, scalability, and cost-effectiveness. That's where Hadoop comes into the picture. Hadoop is a popular open-source framework that stores and processes large datasets in a distributed manner. Why Are Hadoop Projects So Important?

Let's personalize your content