Pushing The Limits Of Scalability And User Experience For Data Processing WIth Jignesh Patel

Data Engineering Podcast

JANUARY 7, 2024

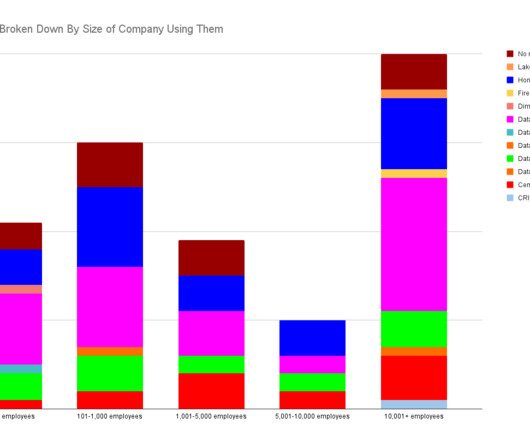

Summary Data processing technologies have dramatically improved in their sophistication and raw throughput. Unfortunately, the volumes of data that are being generated continue to double, requiring further advancements in the platform capabilities to keep up.

Let's personalize your content