Data Warehouse vs Data Lake vs Data Lakehouse: Definitions, Similarities, and Differences

Monte Carlo

AUGUST 25, 2023

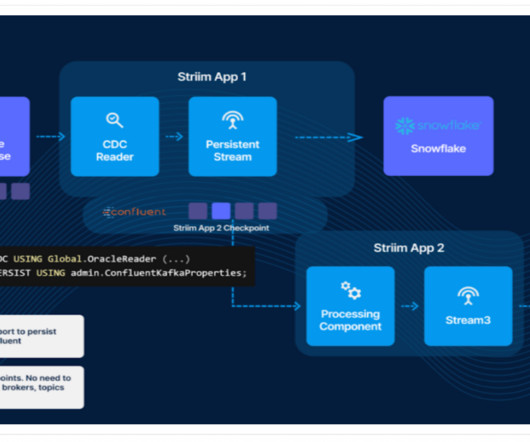

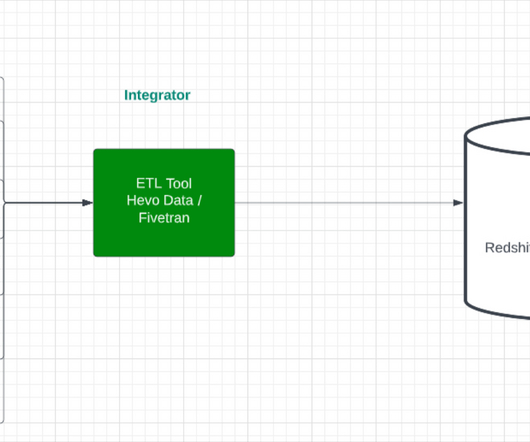

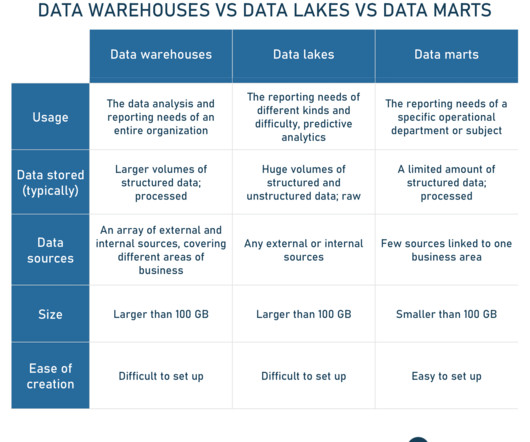

The data lakehouse emerged as the two architectures converged. Cloud warehouse providers began adding features ordinarily associated with lakes, as in Redshift Spectrum, which lets a warehouse query data stored in Amazon S3. Conversely, data lakes began incorporating warehouse-like features, such as SQL functionality and schema definitions, as in Delta Lake, which adds transactions and schema enforcement on top of lake storage.
