They Handle 500B Events Daily. Here’s Their Data Engineering Architecture.

Monte Carlo

NOVEMBER 12, 2024

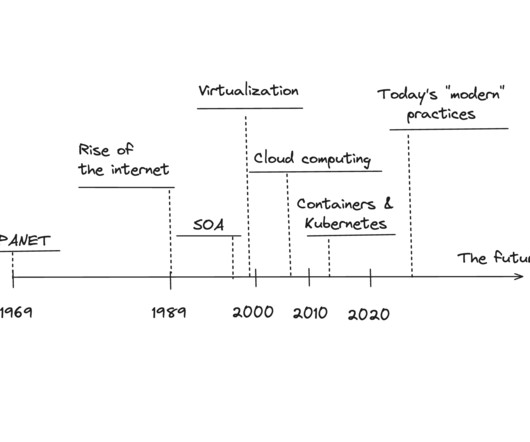

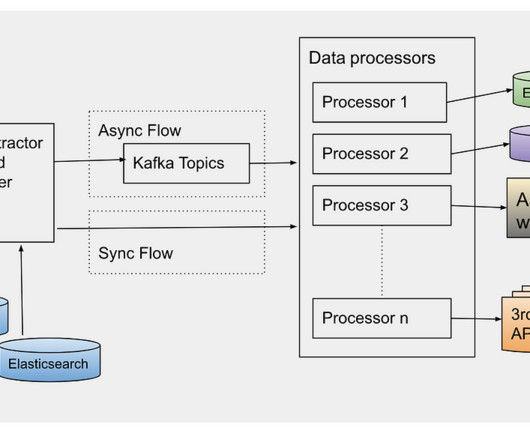

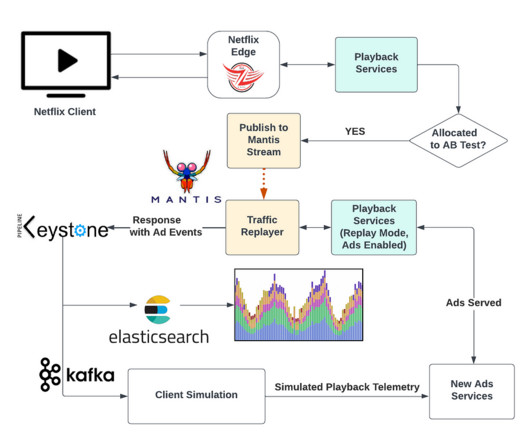

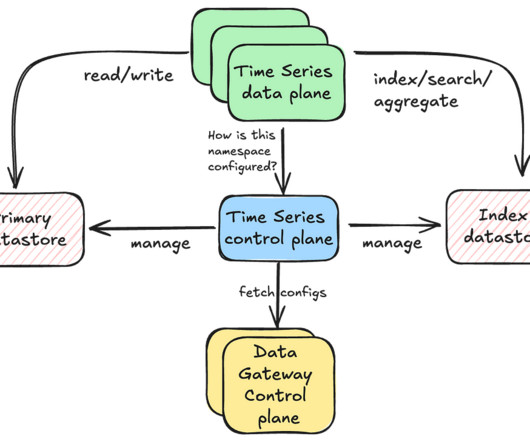

A data engineering architecture is the structural framework that determines how data flows through an organization – from collection and storage to processing and analysis. And who better to learn from than the tech giants who process more data before breakfast than most companies see in a year?
