Talend ETL Tool - A Comprehensive Guide [2025]

ProjectPro

JUNE 6, 2025

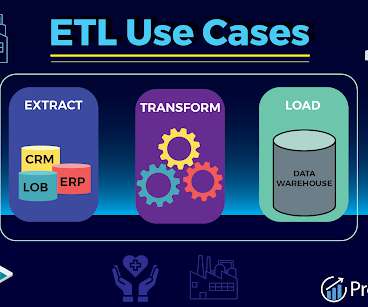

Looking for the best ETL tool on the market for your big data projects? The Talend ETL tool is your one-stop solution! First things first: let us begin with a brief introduction to the Talend ETL tool.

Table of Contents

- What is Talend ETL?
- Why Use Talend ETL Tool For Big Data Projects?
