Data Migration Strategies For Large Scale Systems

Data Engineering Podcast

MAY 26, 2024

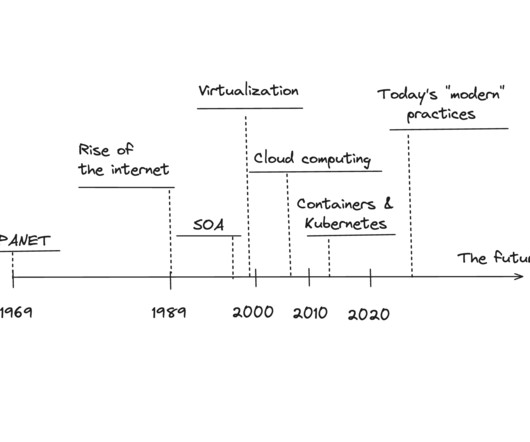

Summary: Any software system that survives long enough will require some form of migration or evolution. When that system is responsible for the data layer, the process becomes more challenging. Sriram Panyam has been involved in several projects that required migrating large volumes of data in high-traffic environments.
