Data logs: The latest evolution in Meta’s access tools

Engineering at Meta

FEBRUARY 4, 2025

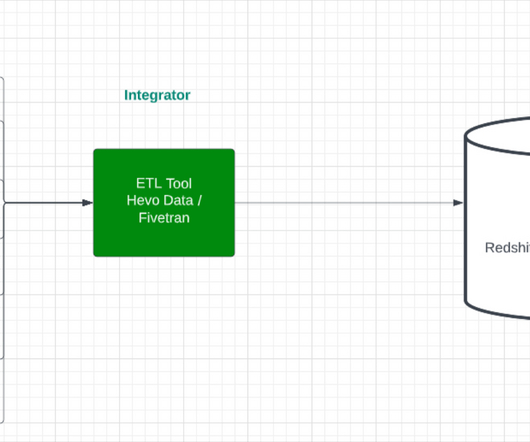

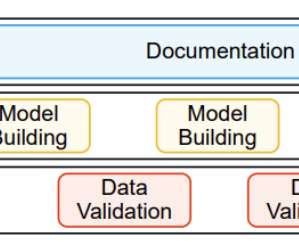

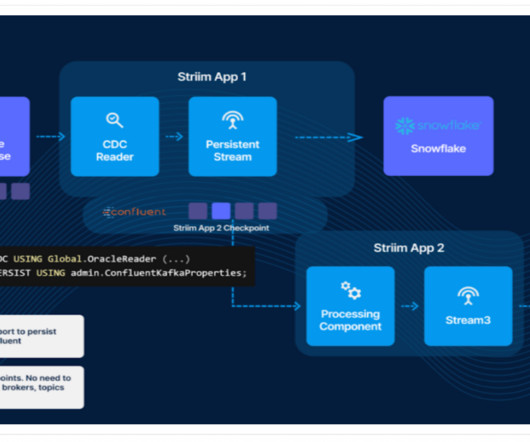

Here we explore the initial system designs we considered, give an overview of the current architecture, and discuss some important principles Meta takes into account in making data accessible and easy to understand.

Users have a variety of tools they can use to manage and access their information on Meta platforms.

What are data logs?
