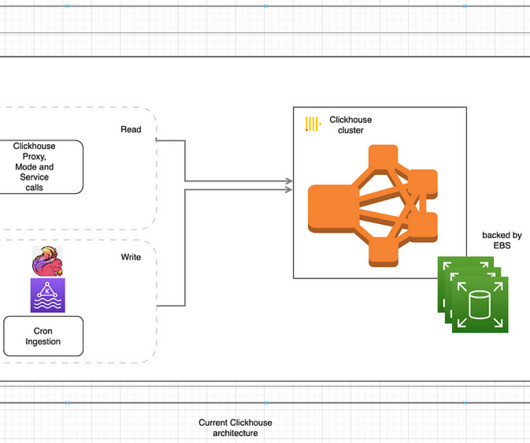

Comparing ClickHouse vs Rockset for Event and CDC Streams

Rockset

OCTOBER 4, 2022

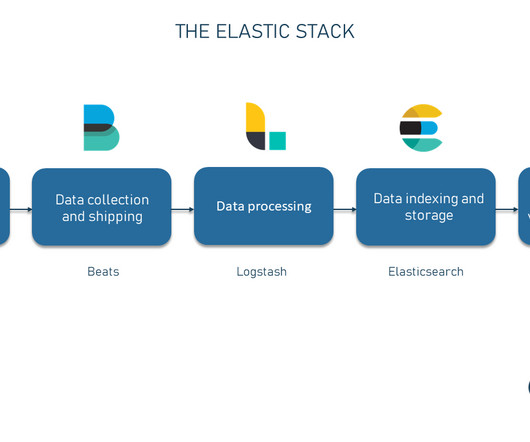

Streaming data feeds many real-time analytics applications, from logistics tracking to real-time personalization. Event streams, such as clickstreams, IoT data and other time-series data, are common data sources for these apps. ClickHouse has several storage engines that can pre-aggregate data.
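To make "pre-aggregate" concrete, here is a minimal sketch of the idea in plain Python: raw events are rolled up into per-minute counts so that later queries read a few summary rows instead of every event. It is only an illustration of the concept, not ClickHouse's implementation; the `pre_aggregate` function, the sample events and the page names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def pre_aggregate(events):
    """Roll up raw (timestamp, page) click events into per-minute, per-page counts.

    Hypothetical helper for illustration only; a real engine would do this
    rollup inside its storage layer rather than in application code.
    """
    rollup = defaultdict(int)
    for ts, page in events:
        # Truncate the timestamp to the start of its minute to form the bucket key.
        minute = ts.replace(second=0, microsecond=0)
        rollup[(minute, page)] += 1
    return dict(rollup)


# Hypothetical clickstream sample: two hits on /home in the same minute,
# one hit on /pricing in the next minute.
events = [
    (datetime(2022, 10, 4, 12, 0, 5), "/home"),
    (datetime(2022, 10, 4, 12, 0, 40), "/home"),
    (datetime(2022, 10, 4, 12, 1, 10), "/pricing"),
]

rollup = pre_aggregate(events)
for (minute, page), count in sorted(rollup.items()):
    print(minute.isoformat(), page, count)
```

The trade-off is the usual one for rollups: queries over the summary are cheaper, but the individual events are no longer visible in the aggregated result.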
