AWS Glue: Unleashing the Power of Serverless ETL Effortlessly

ProjectPro

JUNE 6, 2025

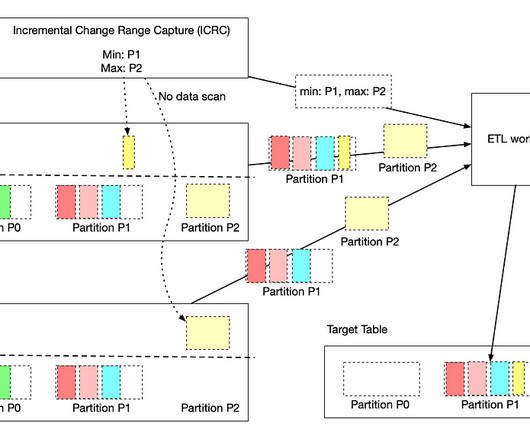

AWS Glue exposes application programming interfaces (APIs) that let users transform the retrieved data set for integration and keep track of all their jobs. Users can run ETL jobs on a schedule, or they can choose the events that trigger them. Glue then writes the job's metadata to the built-in AWS Glue Data Catalog.
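To make the scheduling and event-triggering options concrete, here is a minimal sketch in Python using the `boto3` Glue client's `create_trigger` call. The job names, trigger names, and cron expression are hypothetical placeholders, and the sketch assumes the jobs already exist and that AWS credentials are configured. The request dictionaries are built separately so they can be inspected without contacting AWS.

```python
from typing import Any, Dict


def scheduled_trigger_request(trigger_name: str, job_name: str, cron: str) -> Dict[str, Any]:
    """Build create_trigger arguments for a time-based (scheduled) trigger."""
    return {
        "Name": trigger_name,
        "Type": "SCHEDULED",
        "Schedule": cron,  # Glue cron syntax, e.g. "cron(0 2 * * ? *)"
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def conditional_trigger_request(trigger_name: str, job_name: str, upstream_job: str) -> Dict[str, Any]:
    """Build create_trigger arguments that start job_name after upstream_job succeeds."""
    return {
        "Name": trigger_name,
        "Type": "CONDITIONAL",
        "Predicate": {
            "Conditions": [
                {
                    "LogicalOperator": "EQUALS",
                    "JobName": upstream_job,
                    "State": "SUCCEEDED",
                }
            ]
        },
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def create_trigger(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to AWS Glue. Requires boto3 and valid AWS credentials."""
    import boto3  # imported here so the builders above work without boto3 installed

    glue = boto3.client("glue")
    return glue.create_trigger(**request)


if __name__ == "__main__":
    # Hypothetical names; nothing is sent to AWS unless create_trigger() is called.
    nightly = scheduled_trigger_request("nightly-etl", "orders-etl-job", "cron(0 2 * * ? *)")
    print(nightly)
```

Passing either dictionary to `create_trigger` would register the trigger with Glue; the scheduled variant runs the job at the given time, while the conditional variant runs it in response to another job's completion.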
