Data Ingestion: The Key to a Successful Data Engineering Project

ProjectPro

JUNE 6, 2025

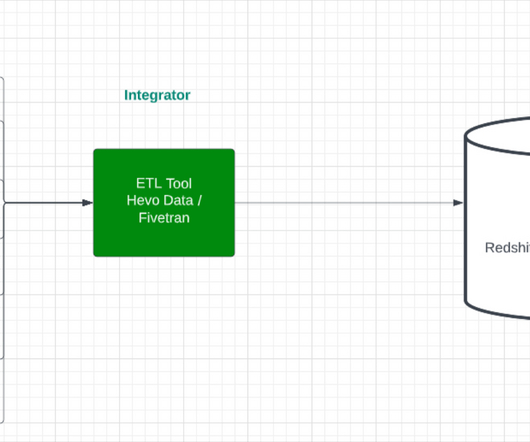

The influx of data and the surging demand for fast-moving analytics have pushed more companies to find ways to store and process data efficiently. This is where Data Engineers shine! Why do you need a Data Ingestion Layer in a Data Engineering Project? This is done by loading the aggregated data into a data warehouse.
