Open-Sourcing AvroTensorDataset: A Performant TensorFlow Dataset For Processing Avro Data

LinkedIn Engineering

JUNE 15, 2023

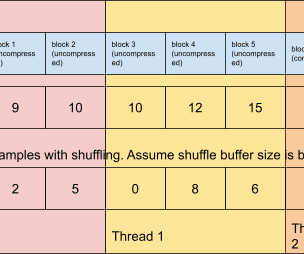

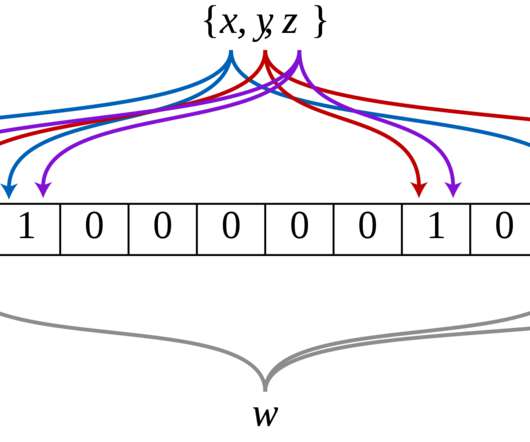

An Avro file is formatted with the following bytes:

Figure 1: Avro file and data block byte layout

The Avro file consists of four “magic” bytes, file metadata (including a schema, which all objects in this file must conform to), a 16-byte file-specific sync marker, and a sequence of data blocks separated by the file’s sync marker.
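To make the layout concrete, here is a minimal Python sketch that walks those bytes using only the standard library. It is not the AvroTensorDataset implementation. The function names (`read_long`, `read_header`, `iter_blocks`) are hypothetical, and the details beyond the paragraph above (zigzag varint longs, metadata stored as a map of string keys to byte values, each block prefixed by an object count and a byte size) are taken from the Avro object container file specification as we understand it.

```python
import io

MAGIC = b"Obj\x01"  # four "magic" bytes: ASCII "Obj" plus version byte 1
SYNC_SIZE = 16      # length of the file-specific sync marker


def read_long(stream):
    """Decode one Avro long (variable-length, zigzag-encoded)."""
    shift, acc = 0, 0
    while True:
        chunk = stream.read(1)
        if not chunk:
            raise EOFError("stream ended inside a long")
        acc |= (chunk[0] & 0x7F) << shift
        if not chunk[0] & 0x80:          # high bit clear: last byte of the long
            return (acc >> 1) ^ -(acc & 1)
        shift += 7


def read_bytes(stream):
    """Read a length-prefixed byte string."""
    size = read_long(stream)
    data = stream.read(size)
    if len(data) != size:
        raise EOFError("stream ended inside a byte string")
    return data


def read_header(stream):
    """Return (metadata dict, sync marker) from the start of an Avro file."""
    if stream.read(len(MAGIC)) != MAGIC:
        raise ValueError("not an Avro object container file")
    metadata = {}
    while True:
        count = read_long(stream)
        if count == 0:                   # a zero count terminates the map
            break
        if count < 0:                    # negative count: a byte size follows
            read_long(stream)
            count = -count
        for _ in range(count):
            key = read_bytes(stream).decode("utf-8")
            metadata[key] = read_bytes(stream)
    sync = stream.read(SYNC_SIZE)
    if len(sync) != SYNC_SIZE:
        raise EOFError("stream ended inside the sync marker")
    return metadata, sync


def iter_blocks(stream, sync):
    """Yield (object_count, raw_payload) for each data block."""
    while stream.read(1):                # stop cleanly at end of file
        stream.seek(-1, io.SEEK_CUR)     # un-read the probe byte
        count = read_long(stream)
        size = read_long(stream)
        payload = stream.read(size)
        if len(payload) != size or stream.read(SYNC_SIZE) != sync:
            raise ValueError("corrupt block or sync marker mismatch")
        yield count, payload
```

Calling `read_header` on a file opened in binary mode yields the metadata (the schema is stored under the `avro.schema` key) and the sync marker, which `iter_blocks` then uses to validate each block boundary. The payloads are returned undecoded; decompressing and deserializing them against the schema is outside the scope of this sketch.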
