Apache Spark Use Cases & Applications

Knowledge Hut

MAY 2, 2024

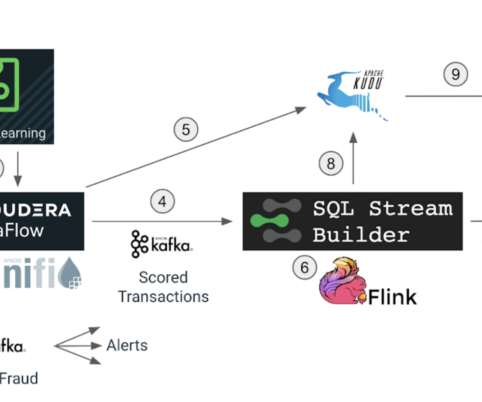

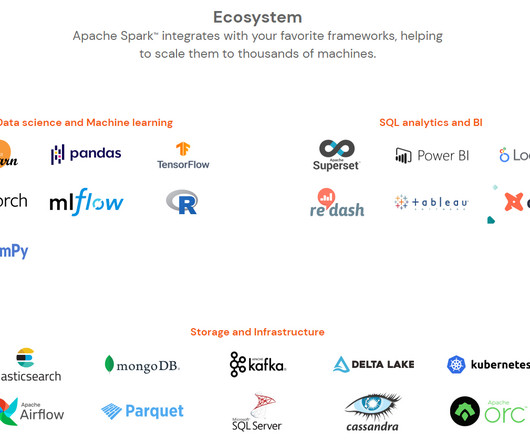

As per Apache, “Apache Spark is a unified analytics engine for large-scale data processing.” Spark is a cluster computing framework, somewhat similar to MapReduce, but it offers more capabilities and features and greater speed, and it provides developer APIs in languages such as Scala, Python, Java, and R.
