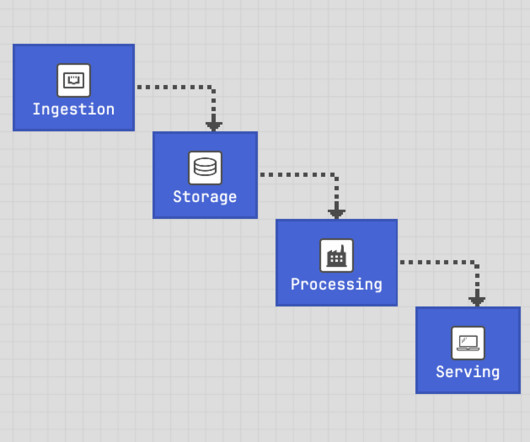

Building End-to-End Data Pipelines: From Data Ingestion to Analysis

KDnuggets

JULY 15, 2025

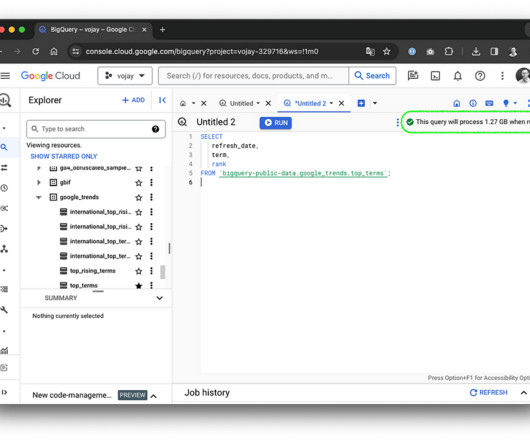

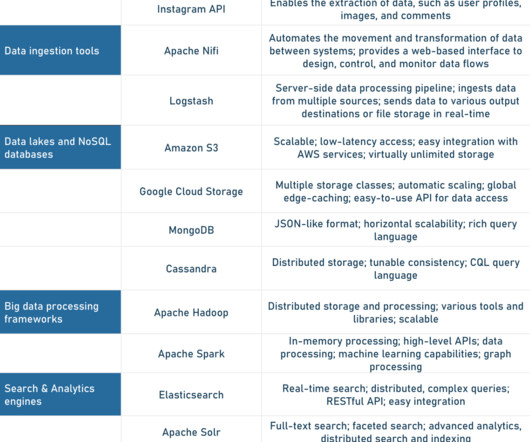

By Josep Ferrer, KDnuggets AI Content Specialist, on July 15, 2025 in Data Science

Image by Author

Delivering the right data at the right time is a primary need for any organization in a data-driven society. Data can arrive in batches (such as hourly reports) or as real-time streams (such as live web traffic).
