Top 20+ Big Data Certifications and Courses in 2023

Knowledge Hut

SEPTEMBER 6, 2023

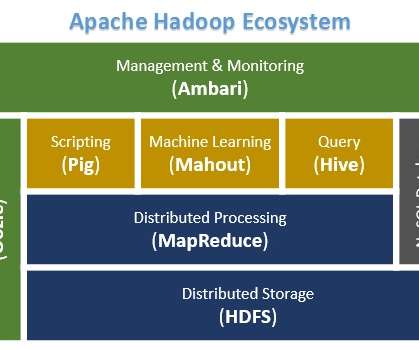

Data Analysis: Strong data analysis skills help you define strategies to transform data and extract useful insights from a data set. Big Data Frameworks: Familiarity with popular Big Data frameworks such as Hadoop, Apache Spark, Apache Flink, or Kafka is important, as these are the tools used for data processing.
