Data Engineer Learning Path, Career Track & Roadmap for 2023

ProjectPro

JANUARY 19, 2022

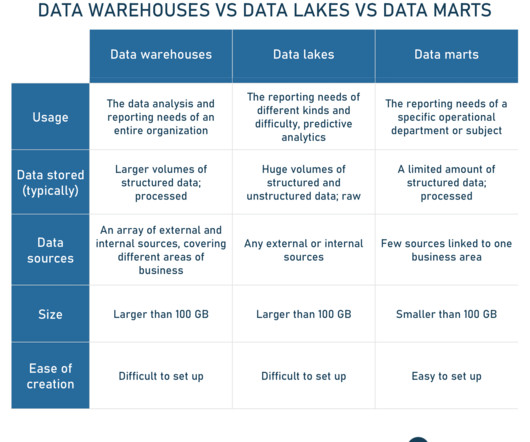

Knowledge of popular big data tools like Apache Spark and Apache Hadoop. Good communication skills, as a data engineer works directly with different teams. Below, we mention a few popular databases and the software used for them. ... and their implementation on the cloud is a must for data engineers.
