Best Data Processing Frameworks That You Must Know

Knowledge Hut

JANUARY 18, 2024

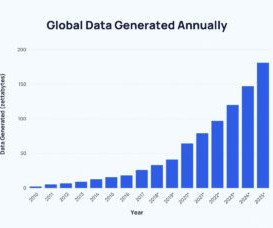

“Big data” is a term coined to describe datasets so large that traditional data processing software simply can't manage them, and “big data analytics” is the practice of analyzing them. For example, big data analytics is used to identify trends in economics, and those trends and patterns are then used to predict what will happen in the future.
