Microsoft Fabric vs. Snowflake: Key Differences You Need to Know

Edureka

APRIL 22, 2025

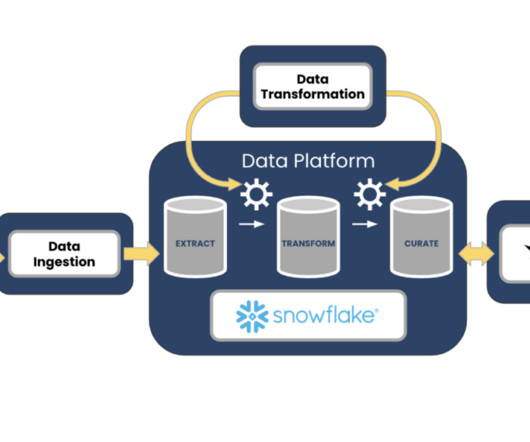

Snowflake's multi-cluster shared data architecture, which separates storage from compute, is one of its primary features. Fabric, by contrast, integrates deeply with Power BI for visualization and Microsoft Purview for governance, giving both business users and data professionals a smooth experience.
