How I Optimized Large-Scale Data Ingestion

databricks

SEPTEMBER 6, 2024

Explore being a PM intern at a technical powerhouse like Databricks, learning how to advance data ingestion tools to drive efficiency.

Netflix Tech

MARCH 7, 2023

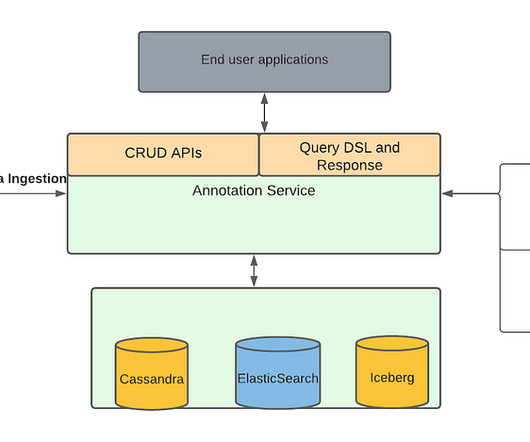

These media-focused machine learning algorithms as well as other teams generate a lot of data from the media files, which we described in our previous blog, are stored as annotations in Marken. We refer the reader to our previous blog article for details. Marken Architecture: Marken’s architecture diagram is as follows.

databricks

DECEMBER 10, 2024

Data engineering teams are frequently tasked with building bespoke ingestion solutions for myriad custom, proprietary, or industry-specific data sources. Many teams find that.

Cloudera

DECEMBER 4, 2024

For more than a decade, Cloudera has been an ardent supporter and committee member of Apache NiFi, long recognizing its power and versatility for data ingestion, transformation, and delivery. and discover how it can transform your data pipelines, watch this video.

Snowflake

MARCH 2, 2023

This solution is both scalable and reliable, as we have been able to effortlessly ingest upwards of 1GB/s throughput.” Rather than streaming data from source into cloud object stores then copying it to Snowflake, data is ingested directly into a Snowflake table to reduce architectural complexity and reduce end-to-end latency.

Databand.ai

JULY 19, 2023

Complete Guide to Data Ingestion: Types, Process, and Best Practices Helen Soloveichik July 19, 2023 What Is Data Ingestion? Data Ingestion is the process of obtaining, importing, and processing data for later use or storage in a database. In this article: Why Is Data Ingestion Important?

DataKitchen

NOVEMBER 5, 2024

You have typical data ingestion layer challenges in the bronze layer: lack of sufficient rows, delays, changes in schema, or more detailed structural/quality problems in the data. Data missing or incomplete at various stages is another critical quality issue in the Medallion architecture.

Snowflake

JUNE 13, 2024

But at Snowflake, we’re committed to making the first step the easiest — with seamless, cost-effective data ingestion to help bring your workloads into the AI Data Cloud with ease. Like any first step, data ingestion is a critical foundational block. Ingestion with Snowflake should feel like a breeze.

Rockset

MARCH 1, 2023

When you deconstruct the core database architecture, deep in the heart of it you will find a single component that is performing two distinct competing functions: real-time data ingestion and query serving. When data ingestion has a flash flood moment, your queries will slow down or time out making your application flaky.

Knowledge Hut

APRIL 25, 2023

An end-to-end Data Science pipeline starts from business discussion to delivering the product to the customers. One of the key components of this pipeline is Data ingestion. It helps in integrating data from multiple sources such as IoT, SaaS, on-premises, etc., What is Data Ingestion?

Team Data Science

MAY 10, 2020

I can now begin drafting my data ingestion/ streaming pipeline without being overwhelmed. With careful consideration and learning about your market, the choices you need to make become narrower and more clear.

Pinterest Engineering

APRIL 7, 2025

In this blog post, we'll discuss our experiences in identifying the challenges associated with EC2 network throttling. In the remainder of this blog post, we'll share how we root cause and mitigate the above issues. In the database service, the application reads data (e.g. 4xl with up to 12.5

Data Engineering Weekly

MARCH 5, 2025

This blog post expands on that insightful conversation, offering a critical look at Iceberg's potential and the hurdles organizations face when adopting it. Data ingestion tools often create numerous small files, which can degrade performance during query execution. What are your data governance and security requirements?

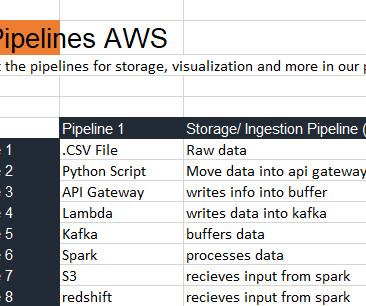

Hepta Analytics

FEBRUARY 14, 2022

DE Zoomcamp 2.2.1 – Introduction to Workflow Orchestration. Following last week's blog, we move to data ingestion. We already had a script that downloaded a CSV file, processed the data and pushed the data to a Postgres database. This week, we got to think about our data ingestion design.

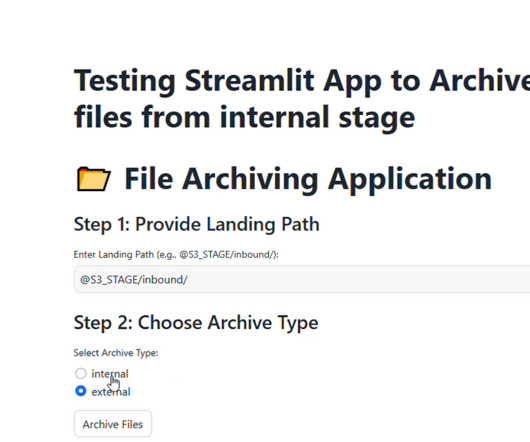

Cloudyard

DECEMBER 18, 2024

Handling feed files in data pipelines is a critical task for many organizations. These files, often stored in stages such as Amazon S3 or Snowflake internal stages, are the backbone of data ingestion workflows. Without a proper archival strategy, these files can clutter staging areas, leading to operational challenges.

Edureka

APRIL 9, 2025

Companies with expertise in Microsoft Fabric are in high demand, including Microsoft, Accenture, AWS, and Deloitte. Are you prepared to influence the data-driven future? Let’s examine the requirements for becoming a Microsoft Fabric Engineer, starting with the knowledge and credentials discussed in this blog.

Data Engineering Weekly

APRIL 20, 2025

The blog took out the last edition’s recommendation on AI and summarized the current state of AI adoption in enterprises. The simplistic model expressed in the blog made it easy for me to reason about the transactional system design. Kafka is probably the most reliable data infrastructure in the modern data era.

Rockset

MAY 3, 2023

lower latency than Elasticsearch for streaming data ingestion. In this blog, we’ll walk through the benchmark framework, configuration and results. We’ll also delve under the hood of the two databases to better understand why their performance differs when it comes to search and analytics on high-velocity data streams.

Data Engineering Weekly

MARCH 23, 2025

Georg Heiler: Upskilling data engineers. What should I prefer for 2028, or how can I break into data engineering? I honestly don’t have a solid answer, but this blog is an excellent overview of upskilling. These are common LinkedIn requests.

databricks

MAY 23, 2024

We're excited to announce native support in Databricks for ingesting XML data. XML is a popular file format for representing complex data.

Cloudera

AUGUST 26, 2021

In addition to big data workloads, Ozone is also fully integrated with authorization and data governance providers namely Apache Ranger & Apache Atlas in the CDP stack. While we walk through the steps one by one from data ingestion to analysis, we will also demonstrate how Ozone can serve as an ‘S3’ compatible object store.

Rockset

OCTOBER 11, 2022

In this blog, we’ll compare and contrast how Elasticsearch and Rockset handle data ingestion as well as provide practical techniques for using these systems for real-time analytics. That’s because Elasticsearch can only write data to one index.

Cloudera

MAY 19, 2021

In the previous blog post in this series, we walked through the steps for leveraging Deep Learning in your Cloudera Machine Learning (CML) projects. Data Ingestion. The raw data is in a series of CSV files. We will firstly convert this to parquet format as most data lakes exist as object stores full of parquet files.

Snowflake

JUNE 4, 2024

To make it easier for you to have better visibility, control and optimization of your Snowflake spend, Snowflake recently added new capabilities to the generally available Cost Management Interface that you can learn more about in this blog. Getting data ingested now only takes a few clicks, and the data is encrypted.

Snowflake

OCTOBER 16, 2024

For organizations who are considering moving from a legacy data warehouse to Snowflake, are looking to learn more about how the AI Data Cloud can support legacy Hadoop use cases, or are struggling with a cloud data warehouse that just isn’t scaling anymore, it often helps to see how others have done it.

Cloudera

JANUARY 20, 2021

The missing chapter is not about point solutions or the maturity journey of use cases; the missing chapter is about the data. It’s always been about the data, and most importantly the journey data weaves from edge to artificial intelligence insight. Conclusion.

Data Engineering Weekly

JULY 7, 2024

Experience Enterprise-Grade Apache Airflow Astro augments Airflow with enterprise-grade features to enhance productivity, meet scalability and availability demands across your data pipelines, and more. Hudi seems to be a de facto choice for CDC data lake features. Notion migrated the insert heavy workload from Snowflake to Hudi.

Cloudera

FEBRUARY 9, 2021

Cloudera Operational Database is now available in three different form-factors in Cloudera Data Platform (CDP). If you are new to Cloudera Operational Database, see this blog post. In this blog post, we’ll look at both Apache HBase and Apache Phoenix concepts relevant to developing applications for Cloudera Operational Database.

Snowflake

APRIL 18, 2024

This blog post explores how Snowflake can help with this challenge. Legacy SIEM cost factors to keep in mind Data ingestion: Traditional SIEMs often impose limits to data ingestion and data retention. But what if security teams didn’t have to make tradeoffs?

Cloudera

FEBRUARY 8, 2021

This is part 2 in this blog series. You can read part 1, here: Digital Transformation is a Data Journey From Edge to Insight. The first blog introduced a mock connected vehicle manufacturing company, The Electric Car Company (ECC), to illustrate the manufacturing data path through the data lifecycle.

Striim

APRIL 18, 2025

Siloed storage: Critical business data is often locked away in disconnected databases, preventing a unified view. Delayed data ingestion: Batch processing delays insights, making real-time decision-making impossible.

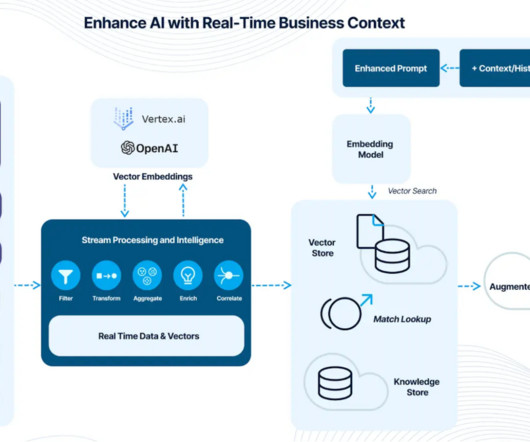

Striim

JANUARY 30, 2025

Systems must be capable of handling high-velocity data without bottlenecks. Addressing these challenges demands an end-to-end approach that integrates data ingestion, streaming analytics, AI governance, and security in a cohesive pipeline. As you can see, there's a lot to consider in adopting real-time AI.

Cloudera

FEBRUARY 8, 2021

Future connected vehicles will rely upon a complete data lifecycle approach to implement enterprise-level advanced analytics and machine learning enabling these advanced use cases that will ultimately lead to fully autonomous drive. This author is passionate about industry 4.0,

Cloudera

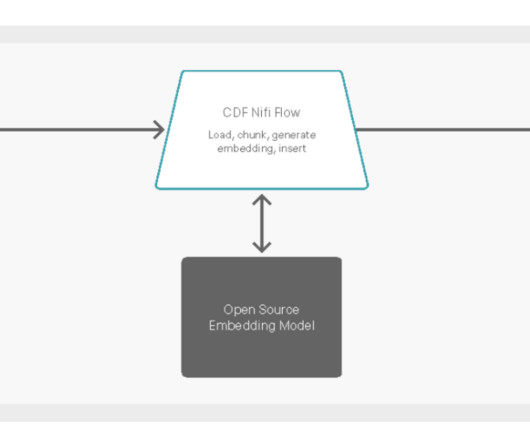

NOVEMBER 1, 2023

The connector makes it easy to update the LLM context by loading, chunking, generating embeddings, and inserting them into the Pinecone database as soon as new data is available. High-level overview of real-time data ingest with Cloudera DataFlow to Pinecone vector database.

Cloudera

AUGUST 4, 2021

Factors to be considered when implementing a predictive maintenance solution: Complexity: Predictive maintenance platforms must enable real-time analytics on streaming data, ingesting, storing, and processing streaming data to instantly deliver insights.

Pinterest Engineering

NOVEMBER 7, 2023

Jeff Xiang | Software Engineer, Logging Platform Vahid Hashemian | Software Engineer, Logging Platform Jesus Zuniga | Software Engineer, Logging Platform At Pinterest, data is ingested and transported at petabyte scale every day, bringing inspiration for our users to create a life they love.

Confluent

FEBRUARY 6, 2019

The blog posts "How to Build and Deploy Scalable Machine Learning in Production with Apache Kafka" and "Using Apache Kafka to Drive Cutting-Edge Machine Learning" describe the benefits of leveraging the Apache Kafka® ecosystem as a central, scalable and mission-critical nervous system. For now, we’ll focus on Kafka.

Cloudera

DECEMBER 14, 2021

The Roads and Transport Authority (RTA) operating in Dubai wanted to apply big data capabilities to transportation and enhance travel efficiency. For this, the RTA transformed its data ingestion and management processes. The post AI and ML: No Longer the Stuff of Science Fiction appeared first on Cloudera Blog.

Data Engineering Podcast

JUNE 19, 2022

report having current investments in automation, 85% of data teams plan on investing in automation in the next 12 months. The Ascend Data Automation Cloud provides a unified platform for data ingestion, transformation, orchestration, and observability. In fact, while only 3.5% That’s where our friends at Ascend.io

Netflix Tech

JANUARY 25, 2023

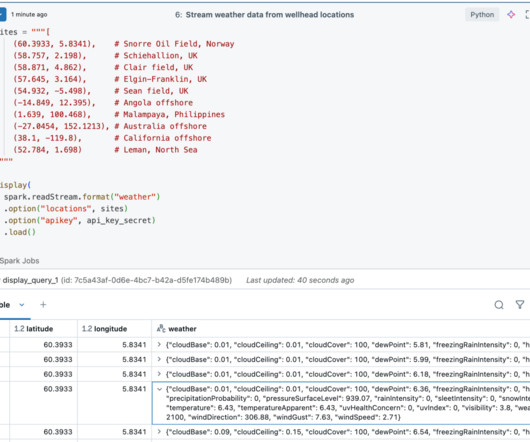

We will cover more details on Semantic Search support in a future blog article. To keep the latency low, we have to make sure that all the annotation indices are balanced, and that no hotspot is created by any algorithm backfill data ingestion for the older movies. We support semantic search using Open Distro for ElasticSearch.

Christophe Blefari

MARCH 4, 2023

I'll try to think about it in the following weeks to understand where I go for the third year of the newsletter and the blog. After last week's question about whether you would consider a paying subscription, I got some feedback, and it helped me a lot to realise how you see the newsletter and what it means for you. So thank you for that.

Cloudera

OCTOBER 11, 2021

Modak’s Nabu is a born in the cloud, cloud-neutral integrated data engineering platform designed to accelerate the journey of enterprises to the cloud. The platform converges data cataloging, data ingestion, data profiling, data tagging, data discovery, and data exploration into a unified platform, driven by metadata.

Cloudera

APRIL 9, 2021

This is part 4 in this blog series. This blog series follows the manufacturing and operations data lifecycle stages of an electric car manufacturer – typically experienced in large, data-driven manufacturing companies. The second blog dealt with creating and managing Data Enrichment pipelines.

Cloudera

AUGUST 11, 2022

Universal Data Distribution Solves DoD Data Transport Challenges. These requirements could be addressed by a Universal Data Distribution (UDD) architecture. UDD provides the capability to connect to any data source anywhere, with any structure, process it, and reliably deliver prioritized sensor data to any destination.
