Spatial data science using ArcGIS Notebooks Blog 3: Data validation and data engineering

ArcGIS

MARCH 10, 2025

This is the third installment in a series of blog articles focused on using ArcGIS Notebooks to map gentrification in US cities.

Cloudyard

APRIL 22, 2025

This is where “Snowpark Magic: Auto-Validate Your S3 to Snowflake Data Loads” comes into play: a powerful approach to automating row-level validation between staged source files and their corresponding Snowflake tables, ensuring trust and integrity across your data pipelines.
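Row-level validation boils down to checking that every row in the staged file appears in the loaded table, no more and no fewer times. The sketch below is plain Python, not Snowpark: it assumes both sides have already been materialized as lists of tuples (the CSV text and `loaded` rows are hypothetical) and compares them as multisets, so dropped rows and duplicated rows are both caught.

```python
import csv
import io
from collections import Counter
from typing import Iterable, Sequence


def reconcile(source_rows: Iterable[Sequence], target_rows: Iterable[Sequence]) -> dict:
    """Compare staged-file rows with loaded-table rows as multisets."""
    src = Counter(tuple(r) for r in source_rows)
    tgt = Counter(tuple(r) for r in target_rows)
    missing = src - tgt      # in the file, absent (or under-counted) in the table
    unexpected = tgt - src   # in the table, not accounted for by the file
    return {
        "source_count": sum(src.values()),
        "target_count": sum(tgt.values()),
        "missing_in_target": sorted(missing.elements()),
        "unexpected_in_target": sorted(unexpected.elements()),
        "ok": not missing and not unexpected,
    }


# Hypothetical staged CSV; in practice this would be the file in the stage.
staged = io.StringIO("id,name\n1,a\n2,b\n3,c\n")
reader = csv.reader(staged)
next(reader)  # skip the header row
source = [tuple(row) for row in reader]

# Hypothetical rows already fetched from the target table.
loaded = [("1", "a"), ("2", "b")]

report = reconcile(source, loaded)
print(report["missing_in_target"])
```

Comparing full rows is the strictest check; a real pipeline might compare row hashes or primary keys instead to keep memory bounded.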

DataKitchen

DECEMBER 6, 2024

Get the DataOps Advantage: Learn how to apply DataOps to monitor, iterate, and automate quality checks, keeping data quality high without slowing down. Practical Tools to Sprint Ahead: Dive into hands-on tips with open-source tools that supercharge data validation and observability. Read the popular blog article.

Monte Carlo

MARCH 24, 2023

The data doesn’t accurately represent the real heights of the animals, so it lacks validity. Let’s dive deeper into these two crucial concepts, both essential for maintaining high-quality data. What Is Data Validity?

Monte Carlo

FEBRUARY 22, 2023

The annoying red notices you get when you sign up for something online saying things like “your password must contain at least one letter, one number, and one special character” are examples of data validity rules in action. It covers not just data validity, but many more data quality dimensions, too.
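The password rule quoted above is a good miniature of a validity rule: each requirement is a separate check, and the user sees one message per failed check. This is a minimal sketch in Python; the regex patterns, and the assumption that a "special character" is anything other than an ASCII letter or digit, are illustrative choices rather than any particular site's policy.

```python
import re

# Each rule pairs a human-readable requirement with a regex that must match somewhere.
RULES = [
    ("at least one letter", re.compile(r"[A-Za-z]")),
    ("at least one number", re.compile(r"[0-9]")),
    # Assumption: "special" means anything that is not an ASCII letter or digit.
    ("at least one special character", re.compile(r"[^A-Za-z0-9]")),
]


def validate_password(password: str) -> list[str]:
    """Return one message per violated rule; an empty list means the value is valid."""
    return [f"Password must contain {requirement}"
            for requirement, pattern in RULES
            if not pattern.search(password)]


print(validate_password("hunter2"))
print(validate_password("hunter2!"))
```

Returning every violation, rather than stopping at the first, is what lets a form show all the red notices at once.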

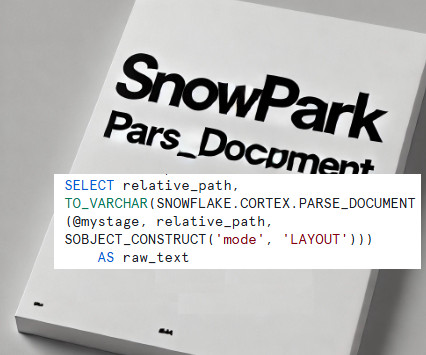

Cloudyard

JANUARY 15, 2025

However, I’ve taken this a step further, leveraging Snowpark to extend its capabilities and build a complete data extraction process. This blog explores how you can leverage the power of PARSE_DOCUMENT with Snowpark, showcasing a use case to extract, clean, and process data from PDF documents. Why Use PARSE_DOC?

Edureka

APRIL 22, 2025

Data engineering can help with it. It is the force behind seamless data flow, enabling everything from AI-driven automation to real-time analytics. To stay competitive, businesses need to adapt to new trends and find new ways to deal with ongoing problems by taking advantage of new possibilities in data engineering.

Ascend.io

OCTOBER 28, 2024

Data transformation helps make sense of the chaos, acting as the bridge between unprocessed data and actionable intelligence. You might even think of effective data transformation as a powerful magnet that draws the needle from the stack, leaving the hay behind. This is crucial for maintaining data integrity and quality.

Picnic Engineering

FEBRUARY 6, 2025

In our previous blogs, we explored how Picnic’s Page Platform transformed the way we build new features, enabling faster iteration, tighter collaboration and less feature-specific complexity. In this blog, we’ll dive into how we configure the pages within Picnic’s store. Now, we’re bringing this same principle to our app.

Towards Data Science

JANUARY 7, 2024

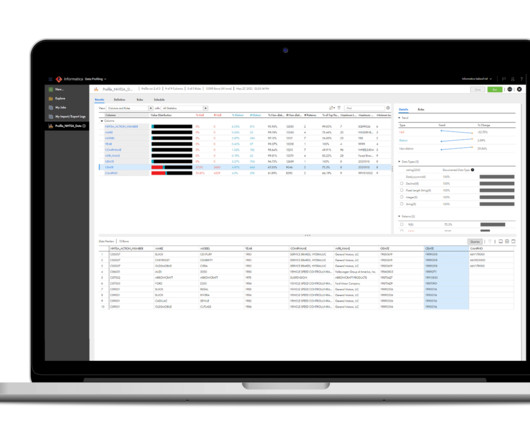

If the data changes over time, you might end up with results you didn’t expect, which is not good. To avoid this, we often use data profiling and data validation techniques. Data profiling gives us statistics about different columns in our dataset. It lets you log all sorts of data. So let’s dive in!
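Data profiling, as described above, means computing per-column statistics so that changes between runs become visible. The sketch below uses only the Python standard library and assumes records arrive as a list of dicts; the tract and rent values are hypothetical, and the chosen statistics (count, nulls, distinct, min/max/mean for numeric columns) are just a common starting set.

```python
import statistics
from typing import Any


def profile(rows: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Compute simple per-column statistics for a list of records."""
    columns = {key for row in rows for key in row}
    report: dict[str, dict[str, Any]] = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]        # a missing key counts as null
        present = [v for v in values if v is not None]
        stats: dict[str, Any] = {
            "count": len(values),
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
        }
        numeric = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numeric and len(numeric) == len(present):   # only summarize fully numeric columns
            stats.update(min=min(numeric), max=max(numeric), mean=statistics.fmean(numeric))
        report[col] = stats
    return report


rows = [  # hypothetical records
    {"tract": "A", "median_rent": 1200},
    {"tract": "B", "median_rent": 1500},
    {"tract": "C", "median_rent": None},
]
for column, stats in profile(rows).items():
    print(column, stats)
```

Storing each run's profile and diffing it against the previous one is a simple way to notice when the data "changes over time" in unexpected ways.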

Tweag

MAY 16, 2023

To minimize the risk of misconfigurations, Nickel features (opt-in) static typing and contracts, a powerful and extensible data validation framework. For configuration data, we tend to use contracts. Contracts are a principled way of writing and applying runtime data validators.

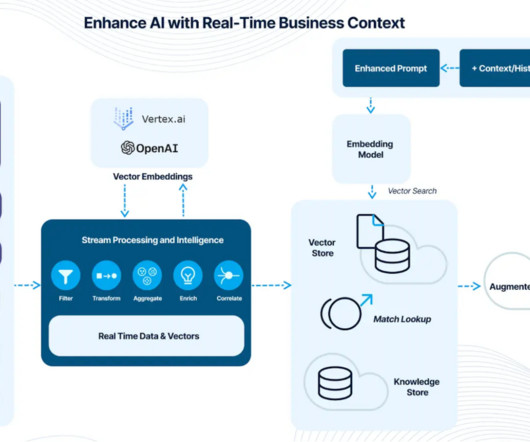

Striim

APRIL 18, 2025

How Striim Enables High-Quality, AI-Ready Data: Striim helps organizations solve these challenges by ensuring real-time, clean, and continuously available data for AI and analytics. Process and clean data as it moves so AI and analytics work with trusted, high-quality inputs.

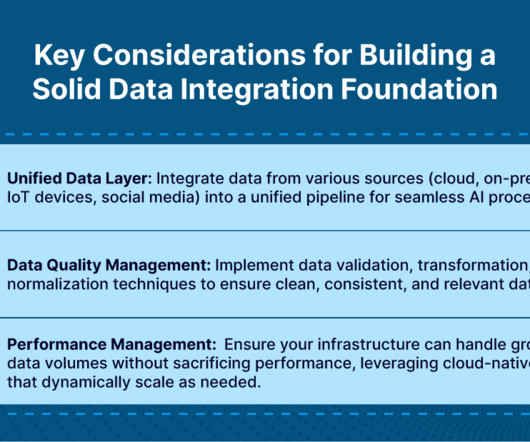

Ascend.io

NOVEMBER 14, 2024

By focusing on these attributes, data engineers can build pipelines that not only meet current demands but are also prepared for future challenges. In this blog post, we’ll explore key strategies for future-proofing your data pipelines. We’ll explore scalability, integration, security, and cost management.

Striim

JANUARY 17, 2025

To achieve accurate and reliable results, businesses need to ensure their data is clean, consistent, and relevant. This proves especially difficult when dealing with large volumes of high-velocity data from various sources.

Data Engineering Weekly

MARCH 31, 2024

My key highlight is that excellent data documentation and “clean data” improve results. The blog further emphasizes its increased investment in Data Mesh and clean data. Databricks: PySpark in 2023 - A Year in Review. Can we safely say PySpark killed Scala-based data pipelines?

Pinterest Engineering

SEPTEMBER 26, 2023

We have published a detailed blog post on its modeling architecture. Performance Validation: We validate the auto-retraining quality at two places throughout the pipeline. The first is data validation, where we examine the features and labels and make sure there is no large shift in their distribution.
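One common way to check for "no large shift in the distribution" of a feature is the Population Stability Index (PSI), which compares binned shares of a baseline sample with a new batch. The snippet does not say which test Pinterest uses, so this is a swapped-in technique shown as a sketch: equal-width bins over the baseline range, a small epsilon so empty bins do not produce log(0), and a 0.2 threshold that is only a commonly cited rule of thumb. The feature values are hypothetical.

```python
import math
from typing import Sequence

DRIFT_THRESHOLD = 0.2  # assumption: a commonly cited rule of thumb; tune per feature


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against `expected`, using equal-width bins
    spanning the range of the baseline (`expected`) sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into the edge bins
        return [max(c / len(values), eps) for c in counts]  # eps keeps log() finite for empty bins

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(1000)]  # hypothetical training-time feature values
shifted = [x + 5 for x in baseline]        # hypothetical retraining batch, shifted upward

for name, batch in [("baseline", baseline), ("shifted", shifted)]:
    score = psi(baseline, batch)
    print(name, round(score, 3), "SHIFT" if score > DRIFT_THRESHOLD else "ok")
```

PSI is symmetric in form and cheap to compute per feature, which makes it a practical gate before retraining; distribution tests such as Kolmogorov-Smirnov are an alternative.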

Data Engineering Weekly

MAY 16, 2023

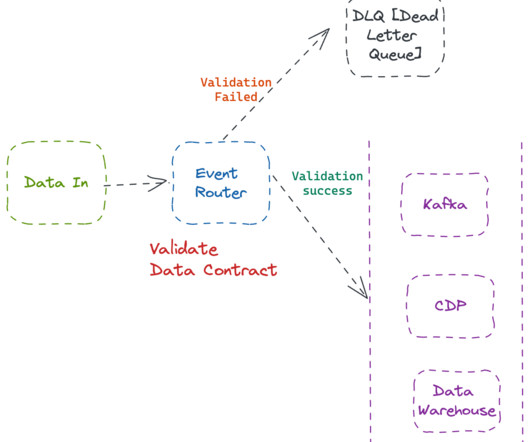

In the second part, we will focus on architectural patterns to implement data quality from a data contract perspective. Why Is Data Quality Expensive? I won’t bore you with the importance of data quality in this blog. Data Testing vs. Why am I making this claim?

Databand.ai

MAY 30, 2023

Here are several reasons data quality is critical for organizations: Informed decision making: Low-quality data can result in incomplete or incorrect information, which negatively affects an organization’s decision-making process. Introducing checks like format validation (e.g.,

Databand.ai

AUGUST 30, 2023

Accurate data ensures that these decisions and strategies are based on a solid foundation, minimizing the risk of negative consequences resulting from poor data quality. There are various ways to ensure data accuracy. Data cleansing involves identifying and correcting errors, inconsistencies, and inaccuracies in data sets.

Databand.ai

AUGUST 30, 2023

These tools play a vital role in data preparation, which involves cleaning, transforming, and enriching raw data before it can be used for analysis or machine learning models. There are several types of data testing tools. In this article: Why Are Data Testing Tools Important?

Databand.ai

AUGUST 30, 2023

It plays a critical role in ensuring that users of the data can trust the information they are accessing. There are several ways to ensure data consistency, including implementing data validation rules, using data standardization techniques, and employing data synchronization processes.

Databand.ai

AUGUST 30, 2023

Poor data quality can lead to incorrect or misleading insights, which can have significant consequences for an organization. DataOps tools help ensure data quality by providing features like data profiling, data validation, and data cleansing. In this article: Why Are DataOps Tools Important?

Pinterest Engineering

NOVEMBER 28, 2023

Background: The Goku-Ingestor is an asynchronous data processing pipeline that performs multiplexing of metrics data.

Data Engineering Weekly

SEPTEMBER 24, 2023

Thoughtworks: Measuring the Value of a Data Catalog. The cost-and-effort value proposition of a Data Catalog implementation is always questionable in large-scale data infrastructure. Thoughtworks, in combination with Adevinta, published a three-phase approach to measuring the value of a data catalog.

Databand.ai

AUGUST 30, 2023

In this article: Why Is Data Testing Important? It’s also important during data migration and integration projects, where data is moved or transformed and must maintain its integrity. Data Validation Testing: Data validation testing ensures that the data entered into the system meets the predefined rules and requirements.

Snowflake

NOVEMBER 30, 2023

And when moving to Snowflake, you get the advantage of the Data Cloud’s architectural benefits (flexibility, scalability and high performance) as well as availability across multiple cloud providers and global regions. Figure 2: Historical data migration using TCS Daezmo Data Migrator Tool.

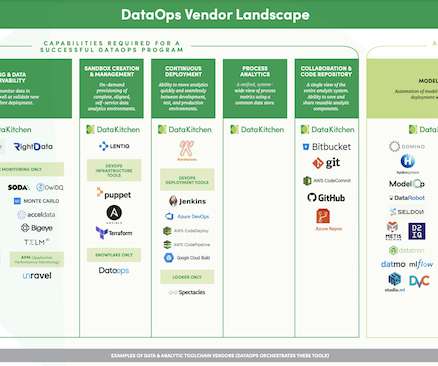

DataKitchen

APRIL 13, 2021

Read the complete blog below for a more detailed description of the vendors and their capabilities. This is not surprising given that DataOps enables enterprise data teams to generate significant business value from their data. Observe, optimize, and scale enterprise data pipelines. DataOps is a hot topic in 2021.

Databand.ai

JULY 19, 2023

Despite these challenges, proper data acquisition is essential to ensure the data’s integrity and usefulness. Data Validation: In this phase, the data that has been acquired is checked for accuracy and consistency.

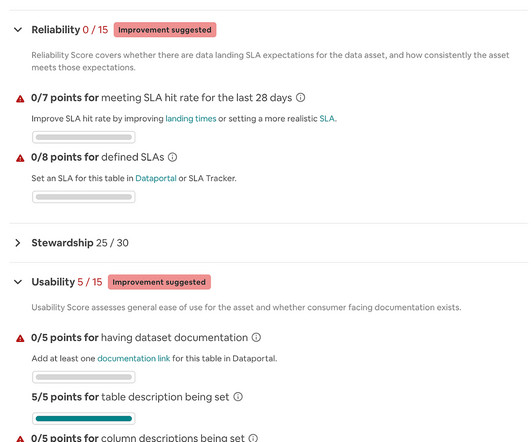

Airbnb Tech

NOVEMBER 28, 2023

However, for all of our uncertified data, which remained the majority of our offline data, we lacked visibility into its quality and didn’t have clear mechanisms for up-leveling it. How could we scale the hard-fought wins and best practices of Midas across our entire data warehouse?

DataKitchen

MAY 14, 2024

Chris will give an overview of data at rest and in use, with Eric returning to demonstrate the practical steps in data testing for both states. Session 3: Mastering Data Testing in Development and Migration. During our third session, the focus will shift towards regression and impact assessment in development cycles.

Databand.ai

AUGUST 30, 2023

Data Quality Rules: Data quality rules are predefined criteria that your data must meet to ensure its accuracy, completeness, consistency, and reliability. These rules are essential for maintaining high-quality data and can be enforced using data validation, transformation, or cleansing processes.
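Predefined rules like these can be expressed as named predicates and evaluated against every record, producing a list of failures that can be reported or used to quarantine rows. This is a minimal Python sketch; the field names (`geoid`, `owners`, `renters`, `households`) and the records are hypothetical, chosen only to show one rule each for completeness, accuracy, and consistency.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[Record], bool]  # returns True when the record passes


def has_11_digit_geoid(r: Record) -> bool:
    # Census tract GEOIDs are 11 digits (state + county + tract).
    geoid = str(r.get("geoid", ""))
    return geoid.isdigit() and len(geoid) == 11


# Hypothetical rules; field names are illustrative only.
RULES = [
    Rule("geoid is present (completeness)", lambda r: bool(r.get("geoid"))),
    Rule("geoid has 11 digits (accuracy)", has_11_digit_geoid),
    Rule("owners + renters == households (consistency)",
         lambda r: r.get("owners", 0) + r.get("renters", 0) == r.get("households", 0)),
]


def evaluate(records: list[Record], rules: list[Rule]) -> list[tuple[int, str]]:
    """Return (record index, rule name) for every rule a record fails."""
    return [(i, rule.name) for i, rec in enumerate(records) for rule in rules if not rule.check(rec)]


records = [  # hypothetical input
    {"geoid": "06075010100", "owners": 40, "renters": 60, "households": 100},
    {"geoid": "6075", "owners": 10, "renters": 5, "households": 20},
]
for index, rule_name in evaluate(records, RULES):
    print(f"record {index} failed: {rule_name}")
```

Keeping rules as data (a list of named checks) rather than scattered `if` statements makes it easy to add, document, and report on them individually.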

Rockset

MARCH 1, 2023

In this release blog, I have just scratched the surface of the new cloud architecture for compute-compute separation. Building massive-scale real-time applications does not need to incur exorbitant infrastructure costs due to resource overprovisioning.

Databand.ai

JULY 19, 2023

Since ELT involves storing raw data, it is essential to ensure that the data is of high quality and consistent. This can be achieved through data cleansing and data validation. Data cleansing involves removing duplicates, correcting errors, and standardizing data.
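The three cleansing steps named above (standardizing, correcting errors, removing duplicates) can be chained in a single pass. This Python sketch assumes records with hypothetical `city` and `zip` fields and a hypothetical corrections table; the key design choice is to standardize before de-duplicating, so rows that differ only in formatting are recognized as duplicates.

```python
from typing import Any

Record = dict[str, Any]

# Hypothetical lookup used to correct known misspellings.
CORRECTIONS = {"San Fransisco": "San Francisco"}


def cleanse(records: list[Record]) -> list[Record]:
    """Standardize fields, correct known errors, then drop duplicates (first occurrence wins)."""
    seen: set[tuple[str, str]] = set()
    cleaned: list[Record] = []
    for rec in records:
        city = " ".join(str(rec.get("city", "")).split()).title()  # standardize whitespace and casing
        city = CORRECTIONS.get(city, city)                           # correct known misspellings
        zip_code = str(rec.get("zip", "")).strip()
        zip_code = zip_code.zfill(5) if zip_code else ""             # restore leading zeros lost as integers
        key = (city, zip_code)
        if key not in seen:                                          # de-duplicate after standardizing
            seen.add(key)
            cleaned.append({"city": city, "zip": zip_code})
    return cleaned


raw = [  # hypothetical raw input
    {"city": "  san fransisco ", "zip": "94103"},
    {"city": "San Francisco", "zip": 94103},
    {"city": "boston", "zip": "2108"},
]
for row in cleanse(raw):
    print(row)
```

In an ELT setting, a function like this would typically run as a transformation over the raw layer, leaving the original records untouched for auditing.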

DataKitchen

FEBRUARY 23, 2024

Webinar: Beyond Data Observability: Personalization
DataKitchen DataOps Observability: Problem Statement White Paper: ‘Taming Chaos’; Technical Product Overview; Four-minute online demo; Detailed Product Documentation; Webinar: Data Observability Demo Day
DataKitchen DataOps TestGen: Problem Statement White Paper: ‘Mystery Box Full Of Data Errors’ (..)

Workfall

JANUARY 3, 2023

Reading Time: 6 minutes. In our previous blog, we demonstrated How to Write Unit Tests for Angular 15 Application Using Jasmine and Enforce Code Quality in a CI Workflow With GitHub Actions. We looked at general unit tests involving components that receive data from services.

Databand.ai

JUNE 20, 2023

To achieve data integrity, organizations must implement various controls, processes, and technologies that help maintain the quality of data throughout its lifecycle. These measures include data validation, data cleansing, data integration, and data security, among others.

Databand.ai

AUGUST 30, 2023

This requires implementing robust data integration tools and practices, such as data validation, data cleansing, and metadata management. These practices help ensure that the data being ingested is accurate, complete, and consistent across all sources.

Databand.ai

JULY 11, 2023

Data Cleansing Data cleansing, also known as data scrubbing or data cleaning, is the process of identifying and correcting or removing errors, inconsistencies, and inaccuracies in data.

Scott Logic

SEPTEMBER 13, 2024

In this blog, we will look at how ML has developed, how it might affect our job as test engineers, and the important strategies, considerations and skills needed to effectively evaluate and test ML models. Validate the Data: Ensure that the training, validation and testing datasets represent the real-world situations the model will face.

Edureka

AUGUST 2, 2023

Raw data, however, is frequently disorganised, unstructured, and challenging to work with directly. Data processing analysts can be useful in this situation. Let’s take a deep dive into the subject and look at what we’re about to study in this blog: What Is Data Processing Analysis?

Databand.ai

JULY 6, 2023

By routinely conducting data integrity tests, organizations can detect and resolve potential issues before they escalate, ensuring that their data remains reliable and trustworthy. Data integrity monitoring can include periodic data audits, automated data integrity checks, and real-time data validation.

Databand.ai

JUNE 21, 2023

By doing so, data integrity tools enable organizations to make better decisions based on accurate, trustworthy information. The three core functions of a data integrity tool are: Data validation: This process involves checking the data against predefined rules or criteria to ensure it meets specific standards.
