Scaling Uber’s Apache Hadoop Distributed File System for Growth

Uber Engineering

APRIL 5, 2018

Three years ago, Uber Engineering adopted Hadoop as the storage (HDFS) and compute (YARN) infrastructure for our organization’s big data analysis.

Data Engineering Weekly

MARCH 5, 2025

But is it truly revolutionary, or is it destined to repeat the pitfalls of past solutions like Hadoop? Danny authored a thought-provoking article comparing Iceberg to Hadoop, not on a purely technical level, but in terms of their hype cycles, implementation challenges, and the surrounding ecosystems.

Snowflake

NOVEMBER 26, 2024

For organizations considering moving from a legacy data warehouse to Snowflake, looking to learn more about how the AI Data Cloud can support legacy Hadoop use cases, or assessing new options if your current cloud data warehouse just isn’t scaling anymore, it helps to see how others have done it.

phData: Data Engineering

NOVEMBER 8, 2024

The world we live in today presents larger datasets, more complex data, and diverse needs, all of which call for efficient, scalable data systems. In this blog, we will discuss: What is the Open Table format (OTF)? These systems are built on open standards and offer immense analytical and transactional processing flexibility.

Cloudera

NOVEMBER 7, 2023

Apache Ozone is compatible with Amazon S3 and Hadoop FileSystem protocols and provides bucket layouts that are optimized for both Object Store and File system semantics. Bucket layouts provide a single Ozone cluster with the capabilities of both a Hadoop Compatible File System (HCFS) and Object Store (like Amazon S3).

Cloudera

SEPTEMBER 15, 2022

It was designed as a native object store to provide extreme scale, performance, and reliability to handle multiple analytics workloads using either the S3 API or the traditional Hadoop API. In this blog post, we will talk about a single Ozone cluster with the capabilities of both a Hadoop Compatible File System (HCFS) and an Object Store (like Amazon S3).

Cloudera

MAY 18, 2021

Prior to the introduction of CDP Public Cloud, many organizations that wanted to leverage CDH, HDP or any other on-prem Hadoop runtime in the public cloud had to deploy the platform in a lift-and-shift fashion, commonly known as “Hadoop-on-IaaS” or simply the IaaS model. Introduction.

Cloudera

DECEMBER 7, 2020

Apache Hadoop Distributed File System (HDFS) is the most popular file system in the big data world. The Apache Hadoop File System interface has provided integration to many other popular storage systems like Apache Ozone, S3, Azure Data Lake Storage etc. There are two challenges with the View File System.

Cloudera

AUGUST 26, 2021

Ozone natively provides Amazon S3 and Hadoop Filesystem compatible endpoints in addition to its own native object store API endpoint and is designed to work seamlessly with enterprise scale data warehousing, machine learning and streaming workloads. Ozone Namespace Overview. STORED AS TEXTFILE. and Cloudera Manager version 7.4.4.

Cloudera

MAY 26, 2021

Advanced threat detection – real-time monitoring of access events to identify changes in behavior on a user level, data asset level, or across systems. log4j.appender.RANGER_AUDIT.File=/var/log/hadoop-hdfs/ranger-hdfs-audit.log. The post Auditing to external systems in CDP Private Cloud Base appeared first on Cloudera Blog.

Striim

MARCH 21, 2025

This blog post describes the advantages of real-time ETL and how it increases the value gained from Snowflake implementations. If you have Snowflake or are considering it, now is the time to think about your ETL for Snowflake.

Cloudera

JUNE 13, 2024

The first time that I really became familiar with this term was at Hadoop World in New York City some ten or so years ago. But, let’s make one thing clear – we are no longer that Hadoop company. But, What Happened to Hadoop? This was the gold rush of the 21st century, except the gold was data. We hope to see you there.

Pinterest Engineering

JULY 25, 2023

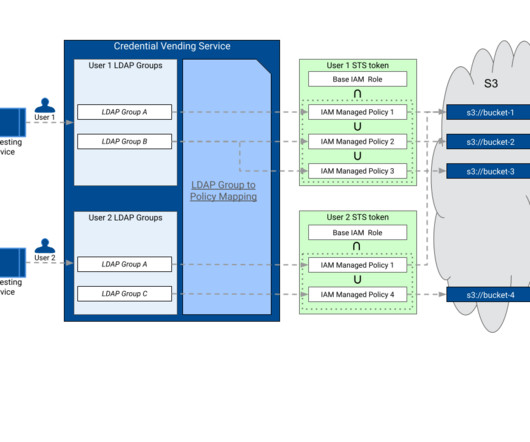

In this post, we focus on how we enhanced and extended Monarch, Pinterest’s Hadoop-based batch processing system, with FGAC capabilities. When building an alternative solution, we shifted our focus from a host-centric system to one that focuses on access control on a per-user basis. We achieved this by creating LDAP groups.

Christophe Blefari

JANUARY 20, 2024

Hadoop initially led the way with Big Data and distributed computing on-premise to finally land on the Modern Data Stack — in the cloud — with a data warehouse at the center. In order to understand today's data engineering, I think it is important to at least know Hadoop concepts and context and computer science basics.

ProjectPro

JANUARY 12, 2016

Choosing the right Hadoop Distribution for your enterprise is a very important decision, whether you have been using Hadoop for a while or you are a newbie to the framework. Different Classes of Users who require Hadoop- Professionals who are learning Hadoop might need a temporary Hadoop deployment.

Knowledge Hut

DECEMBER 28, 2023

That's where Hadoop comes into the picture. Hadoop is a popular open-source framework that stores and processes large datasets in a distributed manner. Organizations are increasingly interested in Hadoop to gain insights and a competitive advantage from their massive datasets. Why Are Hadoop Projects So Important?

ProjectPro

SEPTEMBER 11, 2015

Hadoop has now been around for quite some time. But the question has always remained: is it beneficial to learn Hadoop, what are the career prospects in this field, and what are the prerequisites to learn Hadoop? The availability of skilled big data Hadoop talent will directly impact the market.

Data Engineering Podcast

NOVEMBER 22, 2017

To help other people find the show you can leave a review on iTunes, or Google Play Music, and tell your friends and co-workers. This is your host Tobias Macey and today I’m interviewing Julien Le Dem and Doug Cutting about data serialization formats and how to pick the right one for your systems.

Knowledge Hut

APRIL 25, 2024

In this blog post, we will discuss such technologies. If you pursue the MSc big data technologies course, you will be able to specialize in topics such as Big Data Analytics, Business Analytics, Machine Learning, Hadoop and Spark technologies, Cloud Systems etc. Spark is a fast and general-purpose cluster computing system.

Cloudera

JUNE 2, 2021

Apache Ozone is a distributed object store built on top of Hadoop Distributed Data Store service. It can manage billions of small and large files that are difficult to handle by other distributed file systems. For details of Ozone Security, please refer to our early blog [1]. ozone.scm.db.dirs= /var/lib/hadoop-ozone/scm/data.

ProjectPro

JUNE 14, 2017

Hadoop was first made publicly available as an open source in 2011, since then it has undergone major changes in three different versions. Apache Hadoop 3 is round the corner with members of the Hadoop community at Apache Software Foundation still testing it. The major release of Hadoop 3.x x vs. Hadoop 3.x

Cloudera

OCTOBER 15, 2021

Apache Ozone has added a new feature called File System Optimization (“FSO”) in HDDS-2939. The FSO feature provides file system semantics (hierarchical namespace) efficiently while retaining the inherent scalability of an object store. which contains Hadoop 3.1.1, We enabled Apache Ozone’s FSO feature for the benchmarking tests.

Cloudera

DECEMBER 14, 2017

The Apache Hadoop community recently released version 3.0.0 GA, the third major release in Hadoop’s 10-year history at the Apache Software Foundation. alpha2 on the Cloudera Engineering blog, and 3.0.0 Improved support for cloud storage systems like S3 (with S3Guard), Microsoft Azure Data Lake, and Aliyun OSS.

Data Engineering Podcast

FEBRUARY 11, 2018

In your blog post that explains the design decisions for how Timescale is implemented, you call out the fact that the inserted data is largely append-only, which simplifies the index management. Is Timescale compatible with systems such as Amazon RDS or Google Cloud SQL? What impact has the 10.0

Cloudera

FEBRUARY 8, 2022

Cloudera has been recognized as a Visionary in 2021 Gartner® Magic Quadrant for Cloud Database Management Systems (DBMS) and for the first time, evaluated CDP Operational Database (COD) against the 12 critical capabilities for Operational Databases. It doesn’t require Hadoop admin expertise to set up the database.

ProjectPro

MARCH 23, 2016

“We shouldn’t be trying for bigger computers, but for more systems of computers.” (In reference to Big Data) Developers of Google had taken this quote seriously, when they first published their research paper on GFS (Google File System) in 2003. Yes, Doug Cutting named Hadoop framework after his son’s tiny toy elephant.

The Modern Data Company

FEBRUARY 28, 2023

This blog post will discuss some of the common causes, which have nothing to do with technology and everything to do with poor planning. At the start of the big data era in the early 2010s, implementing Hadoop was considered a prime resume builder. Similarly, a data operating system won’t magically fix broken processes.

Snowflake

OCTOBER 16, 2024

For organizations who are considering moving from a legacy data warehouse to Snowflake, are looking to learn more about how the AI Data Cloud can support legacy Hadoop use cases, or are struggling with a cloud data warehouse that just isn’t scaling anymore, it often helps to see how others have done it.

Cloudera

JULY 15, 2021

This blog post provides an overview of best practice for the design and deployment of clusters incorporating hardware and operating system configuration, along with guidance for networking and security as well as integration with existing enterprise infrastructure. Operating System Disk Layouts.

Cloudera

APRIL 22, 2021

on Cisco UCS S3260 M5 Rack Server with Apache Ozone as the distributed file system for CDP. It works by writing synthetic file system entries directly into Ozone’s OM, SCM, and DataNode RocksDB, and then writing fake data block files on DataNodes. Cloudera will publish separate blog posts with results of performance benchmarks.

Cloudera

SEPTEMBER 7, 2022

As I look forward to the next decade of transformation, I see that innovating in open source will accelerate along three dimensions — project, architectural, and system. System innovation is the next evolutionary step for open source. System innovation. This is where system innovation steps in. Project-level innovation.

ProjectPro

SEPTEMBER 14, 2016

A lot of people who wish to learn Hadoop have several questions regarding a Hadoop developer job role - What are typical tasks for a Hadoop developer? How much Java coding is involved in a Hadoop development job? What day-to-day activities does a Hadoop developer do? Table of Contents Who is a Hadoop Developer?

Snowflake

JULY 22, 2024

In this blog, we offer guidance for leveraging Snowflake’s capabilities around data and AI to build apps and unlock innovation. Determining an architecture and a scalable data model to integrate more source systems in the future. Figure 1: Drivers and success criteria for data platform initiatives.

ProjectPro

MAY 19, 2015

It is possible today for organizations to store all the data generated by their business at an affordable price, all thanks to Hadoop, the Sirius star in the cluster of a million stars. With Hadoop, even the impossible things look trivial. So the big question is: how is learning Hadoop helpful to you as an individual?

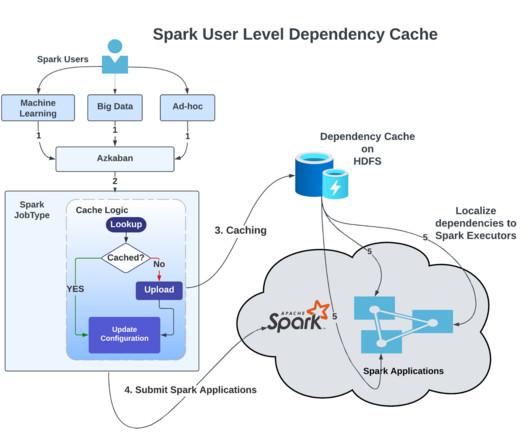

LinkedIn Engineering

MARCH 9, 2023

We execute nearly 100,000 Spark applications daily in our Apache Hadoop YARN (more on how we scaled YARN clusters here). Every day, we upload nearly 30 million dependencies to the Apache Hadoop Distributed File System (HDFS) to run Spark applications. Conclusion Our project aligns with the "doing more with less" philosophy.

ProjectPro

MARCH 23, 2015

In one of our previous articles we discussed the Hadoop 2.0 YARN framework and how the responsibility of managing the Hadoop cluster is shifting from MapReduce towards YARN. Here we will highlight the feature - high availability in Hadoop 2.0

Robinhood

FEBRUARY 7, 2024

Together, we are building products and services that help create a financial system everyone can participate in. When dealing with large-scale data, we turn to batch processing with distributed systems to complete high-volume jobs. Authored by: Grace L., and Sreeram R.

Data Engineering Podcast

MARCH 22, 2021

For analytical systems, the only way to provide this reliably is by implementing change data capture (CDC). Unfortunately, this is a non-trivial undertaking, particularly for teams that don’t have extensive experience working with streaming data and complex distributed systems. What are the alternatives to CDC?

Maxime Beauchemin

JANUARY 20, 2017

This discipline also integrates specialization around the operation of so-called “big data” distributed systems, along with concepts around the extended Hadoop ecosystem, stream processing, and computation at scale. This includes tasks like setting up and operating platforms like Hadoop/Hive/HBase, Spark, and the like.

Knowledge Hut

DECEMBER 26, 2023

They are required to have deep knowledge of distributed systems and computer science. Building data systems and pipelines Data pipelines refer to the design systems used to capture, clean, transform and route data to different destination systems, which data scientists can later use to analyze and gain information.

Data Engineering Weekly

JUNE 2, 2024

Workflow Optimization: Decomposing complex tasks into smaller, manageable steps and prioritizing deterministic workflows can enhance the reliability and performance of LLM-based systems. [link] Solmaz Shahalizadeh: How to get more out of your startup’s data strategy Data is always an afterthought in many organizations.

ProjectPro

JUNE 30, 2016

This blog post gives an overview on the big data analytics job market growth in India which will help the readers understand the current trends in big data and hadoop jobs and the big salaries companies are willing to shell out to hire expert Hadoop developers. It’s raining jobs for Hadoop skills in India.

ProjectPro

AUGUST 18, 2016

To begin your big data career, it is more a necessity than an option to have a Hadoop Certification from one of the popular Hadoop vendors like Cloudera, MapR or Hortonworks. Quite a few Hadoop job openings mention specific Hadoop certifications like Cloudera or MapR or Hortonworks, IBM, etc. as a job requirement.

LinkedIn Engineering

DECEMBER 19, 2023

Co-authors: Arjun Mohnot, Jenchang Ho, Anthony Quigley, Xing Lin, Anil Alluri, Michael Kuchenbecker LinkedIn operates one of the world’s largest Apache Hadoop big data clusters. Historically, deploying code changes to Hadoop big data clusters has been complex.
