Reducing The Barrier To Entry For Building Stream Processing Applications With Decodable

Data Engineering Podcast

OCTOBER 15, 2023

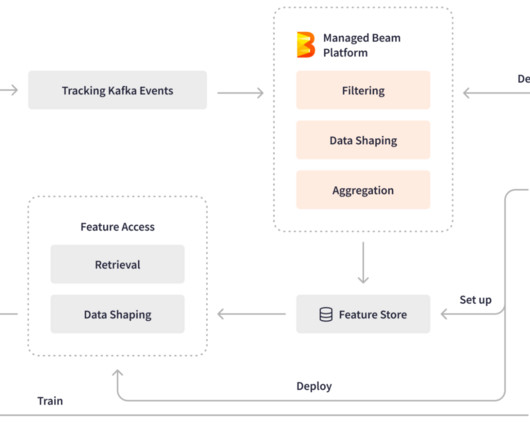

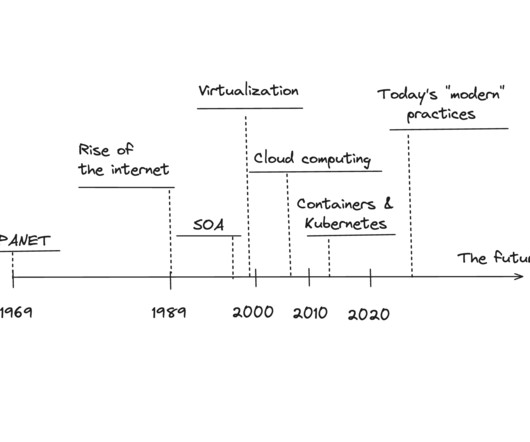

Summary: Building streaming applications has gotten substantially easier over the past several years. Despite this, it is still operationally challenging to deploy and maintain your own stream processing infrastructure. One question raised in the episode: what was the process for adding full Java support in addition to SQL?
