Streaming Data from the Universe with Apache Kafka

Confluent

JUNE 13, 2019

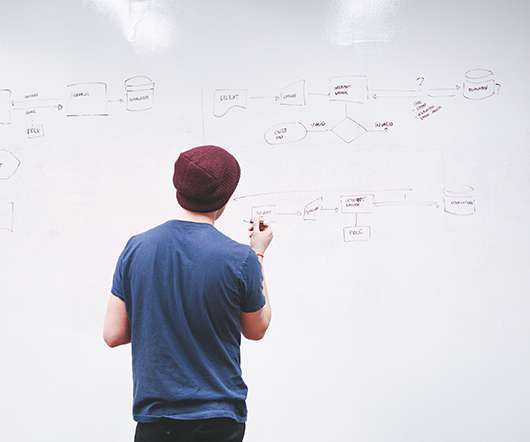

Much of the code used by modern astronomers is written in Python, so the endpoints of the Zwicky Transient Facility (ZTF) alert distribution system need to support Python at a minimum. We built our alert distribution code in Python, around Confluent's Python client for Apache Kafka.

Alert data pipeline and system design
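As a rough illustration of what a Python endpoint built on Confluent's client can look like, here is a minimal consumer-loop sketch. It is not the ZTF team's actual code. The helper names `make_consumer_config` and `consume_alerts` are hypothetical, and the config values are placeholders. The loop only relies on the `poll()`, `error()` and `value()` methods that `confluent_kafka.Consumer` and its messages provide, so it can also run against a stand-in object.

```python
from typing import Callable


def make_consumer_config(bootstrap_servers: str, group_id: str) -> dict:
    """Build a config dict in the form accepted by confluent_kafka.Consumer.

    The values passed in are placeholders, not ZTF's real settings.
    """
    return {
        "bootstrap.servers": bootstrap_servers,
        "group.id": group_id,
        # With no committed offset for this group, start at the oldest retained message.
        "auto.offset.reset": "earliest",
    }


def consume_alerts(consumer, handle: Callable[[bytes], None], max_empty_polls: int = 3) -> int:
    """Poll `consumer` and pass each message payload to `handle`.

    Stops after `max_empty_polls` consecutive polls return no message.
    Returns the number of payloads handed to `handle`.
    """
    processed = 0
    empty = 0
    while empty < max_empty_polls:
        msg = consumer.poll(1.0)  # wait up to 1 second for a message
        if msg is None:
            empty += 1
            continue
        empty = 0
        if msg.error():
            # The message carries an error instead of a payload; skip it.
            continue
        handle(msg.value())  # raw bytes; decoding is left to the caller
        processed += 1
    return processed
```

With the real client, this could be wired up as `consumer = Consumer(make_consumer_config("localhost:9092", "my-group"))`, followed by `consumer.subscribe(["alerts-topic"])`, a call to `consume_alerts(consumer, print)`, and then `consumer.close()`. Here `Consumer` is imported from `confluent_kafka`, and the broker address and topic name are illustrative only. Keeping payload decoding in the caller's `handle` function leaves the loop independent of the alert serialization format.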
