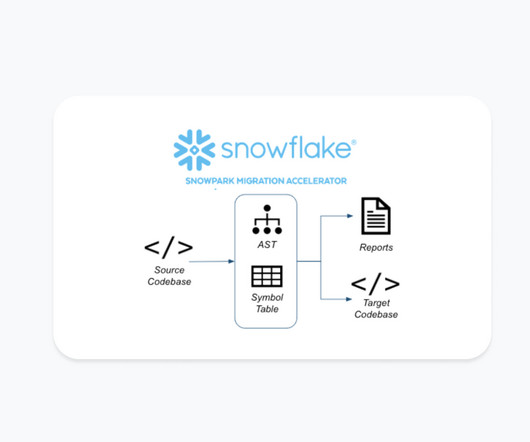

Modern Data Engineering: Free Spark to Snowpark Migration Accelerator for Faster, Cheaper Pipelines in Snowflake

Snowflake

JUNE 20, 2024

Spark is a popular solution for processing large data sets, yet it can be challenging to manage, especially for users who are new to big data processing or distributed systems. The Spark to Snowpark Migration Accelerator never analyzes source data (code is its only input), and it does not connect to any source platform.
