Fault Tolerance in Distributed Systems: Tracing with Apache Kafka and Jaeger

Confluent

JULY 24, 2019

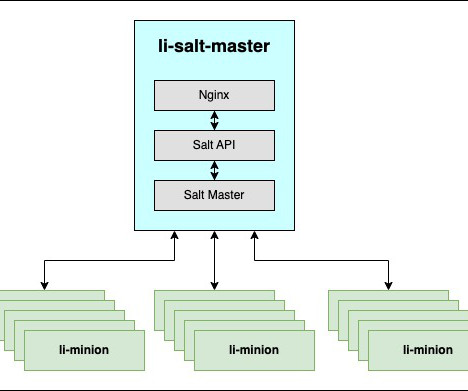

Using Jaeger tracing, I’ve been able to answer an important question that nearly every Apache Kafka ® project that I’ve worked on posed: how is data flowing through my distributed system? Distributed tracing with Apache Kafka and Jaeger. Example of a Kafka project with Jaeger tracing. What does this all mean?

Let's personalize your content