Introducing Netflix’s Key-Value Data Abstraction Layer

Netflix Tech

SEPTEMBER 18, 2024

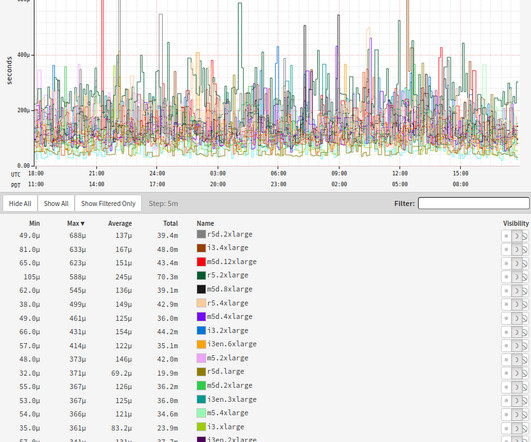

Central to this infrastructure is our use of multiple online distributed databases such as Apache Cassandra, a NoSQL database known for its high availability and scalability. The key-value data model has two levels: the first level is a hashed string ID (the primary key), and the second level is a sorted map of key-value pairs of bytes.
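To make the two-level model concrete, here is a minimal in-memory sketch in Python. It is an illustration only, not Netflix's implementation: the class name `TwoLevelMap` and the methods `put` and `scan` are hypothetical, and Python's built-in dict hashing stands in for whatever hashing a real distributed store applies to the ID.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class TwoLevelMap:
    """Hypothetical model: string ID -> sorted map of bytes key -> bytes value."""

    def __init__(self) -> None:
        # First level: the record ID, looked up by hash (a plain dict here).
        # Second level: item keys kept in sorted order, plus their values.
        self._keys: Dict[str, List[bytes]] = defaultdict(list)
        self._values: Dict[str, Dict[bytes, bytes]] = defaultdict(dict)

    def put(self, record_id: str, key: bytes, value: bytes) -> None:
        """Insert or overwrite one item under the given record ID."""
        if key not in self._values[record_id]:
            bisect.insort(self._keys[record_id], key)  # keep keys sorted
        self._values[record_id][key] = value

    def scan(
        self, record_id: str, start: bytes = b"", end: Optional[bytes] = None
    ) -> List[Tuple[bytes, bytes]]:
        """Return items with start <= key < end, in key order."""
        keys = self._keys.get(record_id, [])
        values = self._values.get(record_id, {})
        lo = bisect.bisect_left(keys, start)
        hi = len(keys) if end is None else bisect.bisect_left(keys, end)
        return [(k, values[k]) for k in keys[lo:hi]]
```

Because the second level is sorted, a range read over one ID is a slice of an ordered list rather than a full scan, which is the property that makes the sorted map useful for ordered or paginated access within a single record.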
