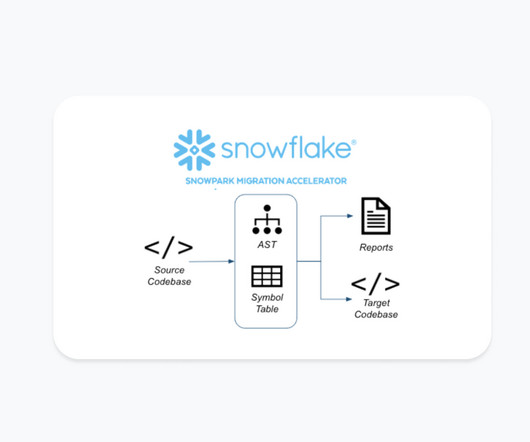

Modern Data Engineering: Free Spark to Snowpark Migration Accelerator for Faster, Cheaper Pipelines in Snowflake

Snowflake

JUNE 20, 2024

With familiar DataFrame-style programming and custom code execution, Snowpark lets teams process their data in Snowflake using Python and other programming languages, while scaling and performance tuning are handled automatically. Snowflake customers see, on average, 4.6x faster performance and 35% cost savings with Snowpark compared with managed Spark.
