ETL vs. ELT and the Evolution of Data Integration Techniques

Ascend.io

DECEMBER 14, 2022

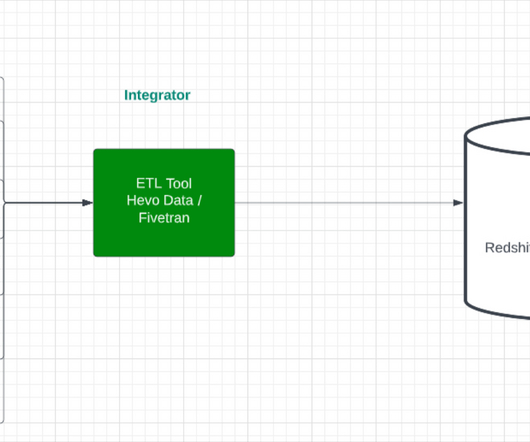

How ETL Became Outdated

The ETL (extract, transform, load) process is a data consolidation technique in which data is extracted from one or more sources, transformed, and then loaded into a target destination.

Optimized for Decision-Making

Modern warehouses are columnar and designed for storing and analyzing large datasets: because each column is stored contiguously, an analytical query reads only the columns it needs instead of scanning every full row.
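To make the difference between ETL and ELT concrete, here is a minimal sketch that runs both orderings against the same records. It assumes an in-memory SQLite database as a stand-in for the target warehouse (SQLite is row-oriented, not columnar, so it only illustrates *where* the transform step runs). The records, table names (`orders_raw`, `orders_etl`, `orders_elt`), and helper functions are hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical raw records from a source system (messy names, amounts as strings in cents).
RAW_ORDERS = [
    {"order_id": 1, "customer": " alice ", "amount_cents": "1250"},
    {"order_id": 2, "customer": "BOB", "amount_cents": "899"},
    {"order_id": 3, "customer": "alice", "amount_cents": "300"},
]


def extract():
    """Extract: read records from the (hypothetical) source."""
    return list(RAW_ORDERS)


def etl(conn):
    """ETL: transform in the pipeline, then load only the cleaned result."""
    rows = extract()
    cleaned = [
        (r["order_id"], r["customer"].strip().lower(), int(r["amount_cents"]) / 100)
        for r in rows
    ]
    conn.execute("CREATE TABLE orders_etl (order_id INTEGER, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def elt(conn):
    """ELT: load the raw records as-is, then transform with SQL inside the target."""
    rows = extract()
    conn.execute(
        "CREATE TABLE orders_raw (order_id INTEGER, customer TEXT, amount_cents TEXT)"
    )
    # Named placeholders let sqlite3 read values directly from each dict.
    conn.executemany(
        "INSERT INTO orders_raw VALUES (:order_id, :customer, :amount_cents)", rows
    )
    # The transform step runs inside the database engine, after loading.
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id,
               LOWER(TRIM(customer)) AS customer,
               CAST(amount_cents AS INTEGER) / 100.0 AS amount
        FROM orders_raw
        """
    )


def totals(conn, table):
    """Revenue per customer; the table name is trusted (internal) here."""
    query = f"SELECT customer, SUM(amount) FROM {table} GROUP BY customer ORDER BY customer"
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    etl(conn)
    elt(conn)
    print(totals(conn, "orders_etl"))
    print(totals(conn, "orders_elt"))
```

Both pipelines end with the same cleaned table; the difference is where the transformation happens. In the ELT path the untouched `orders_raw` table also stays in the target, so the data can be re-transformed later without extracting it again.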
