Setting up Data Lake on GCP using Cloud Storage and BigQuery

Analytics Vidhya

FEBRUARY 25, 2023

The need for a data lake arises from the growing volume, variety, and velocity of data companies need to manage and analyze.

Start Data Engineering

AUGUST 17, 2021

1. Batch Data Pipelines
1.1 Process => Data Warehouse
1.2 Process => Cloud Storage => Data Warehouse
2. Near Real-Time Data Pipelines
2.1 Data Stream => Consumer => Data Warehouse
2.2

Snowflake

APRIL 2, 2025

Data storage has been evolving, from databases to data warehouses and expansive data lakes, with each architecture responding to different business and data needs. Traditional databases excelled at structured data and transactional workloads but struggled with performance at scale as data volumes grew.

Cloudera

SEPTEMBER 29, 2020

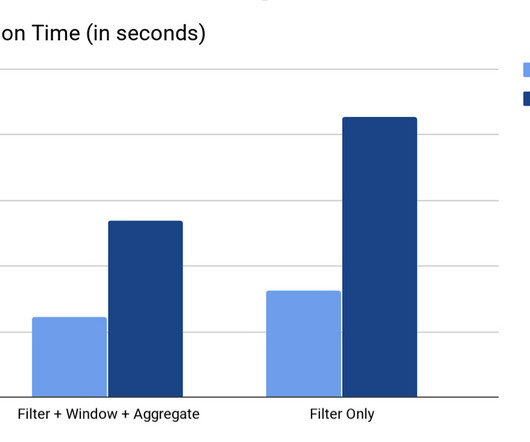

Performance is a key criterion, if not the most important one, in choosing a cloud data warehouse service. In today’s fast-changing world, enterprises have to make data-driven decisions quickly, and for that they rely heavily on their data warehouse service.

Towards Data Science

MARCH 6, 2023

And that’s the target of today’s post: we’ll be developing a data pipeline using Apache Spark, Google Cloud Storage, and Google BigQuery (using the free tier; not sponsored). The tools: Spark is an all-purpose, distributed, memory-based data processing framework geared towards processing extremely large amounts of data.

Data Engineering Podcast

FEBRUARY 18, 2024

Summary: A data lakehouse is intended to combine the benefits of data lakes (cost-effective, scalable storage and compute) and data warehouses (user-friendly SQL interface). Multiple open source projects and vendors have been working together to make this vision a reality.

Cloudera

SEPTEMBER 10, 2021

Shared Data Experience (SDX) on Cloudera Data Platform (CDP) enables centralized data access control and audit for workloads in the Enterprise Data Cloud. The public cloud (CDP-PC) editions default to using cloud storage (S3 for AWS, ADLS Gen2 for Azure).

Cloudera

FEBRUARY 9, 2021

Today’s customers have a growing need for faster end-to-end data ingestion to meet the expected speed of insights and overall business demand. This ‘need for speed’ drives a rethink on building a more modern data warehouse solution, one that balances speed with platform cost management, performance, and reliability.

phData: Data Engineering

NOVEMBER 8, 2024

Versioning also ensures a safer experimentation environment, where data scientists can test new models or hypotheses on historical data snapshots without impacting live data. Note: Cloud data warehouses like Snowflake and BigQuery already have a default time travel feature. FAQs: What is a Data Lakehouse?

Edureka

APRIL 22, 2025

Fabric is meant for organizations looking for a single pane of glass across their data estate with seamless integration and a low learning curve for Microsoft users. Snowflake is a cloud-native platform for data warehouses that prioritizes collaboration, scalability, and performance. Office 365, Power BI, Azure).

Monte Carlo

FEBRUARY 6, 2023

So, you’re planning a cloud data warehouse migration. But be warned, a warehouse migration isn’t for the faint of heart. As you probably already know if you’re reading this, a data warehouse migration is the process of moving data from one warehouse to another. A worthy quest to be sure.

Striim

MARCH 21, 2025

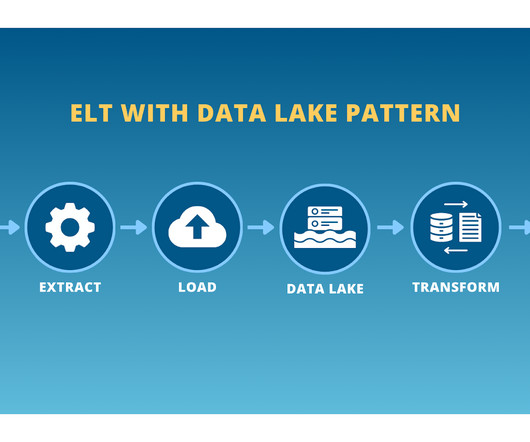

Batch processing: data is typically extracted from databases at the end of the day, saved to disk for transformation, and then loaded in batch to a data warehouse. Batch data integration is useful for data that isn’t extremely time-sensitive. Electric bills are a relevant example.
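The batch flow described above (extract, stage on disk, transform, load in one batch) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: in-memory SQLite databases stand in for both the operational database and the warehouse, and the table names `meter_readings` and `daily_usage`, the column names, and the `run_daily_batch` helper are all hypothetical, chosen to echo the electric-bill example.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def run_daily_batch(source: sqlite3.Connection,
                    warehouse: sqlite3.Connection,
                    staging_dir: Path) -> int:
    """Extract rows, stage them on disk, transform, then load in one batch."""
    # Extract: pull the day's rows from the (stand-in) operational database.
    rows = source.execute(
        "SELECT customer_id, kwh FROM meter_readings"
    ).fetchall()

    # Stage: save the raw extract to disk before transforming it.
    staged_file = staging_dir / "meter_readings.csv"
    with staged_file.open("w", newline="") as f:
        csv.writer(f).writerows(rows)

    # Transform: aggregate usage per customer from the staged file.
    totals: dict[int, float] = {}
    with staged_file.open(newline="") as f:
        for customer_id, kwh in csv.reader(f):
            key = int(customer_id)
            totals[key] = totals.get(key, 0.0) + float(kwh)

    # Load: write all aggregated rows to the (stand-in) warehouse at once.
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS daily_usage "
        "(customer_id INTEGER, total_kwh REAL)"
    )
    warehouse.executemany(
        "INSERT INTO daily_usage VALUES (?, ?)", sorted(totals.items())
    )
    warehouse.commit()
    return len(totals)


# Hypothetical sample data: three meter readings for two customers.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE meter_readings (customer_id INTEGER, kwh REAL)")
source.executemany(
    "INSERT INTO meter_readings VALUES (?, ?)",
    [(1, 2.5), (2, 1.0), (1, 3.5)],
)

warehouse = sqlite3.connect(":memory:")
with tempfile.TemporaryDirectory() as tmp:
    loaded = run_daily_batch(source, warehouse, Path(tmp))

result = warehouse.execute(
    "SELECT customer_id, total_kwh FROM daily_usage ORDER BY customer_id"
).fetchall()
```

In a real deployment the staging step would more likely target cloud object storage and the load step a warehouse bulk-load job; the sketch only shows the ordering of the stages.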

U-Next

SEPTEMBER 7, 2022

The terms “Data Warehouse” and “Data Lake” may have confused you, and you may have some questions. There are times when the data is structured, but it is often messy since it is ingested directly from the data source. What is Data Warehouse? Data Warehouse in DBMS:

Cloudera

SEPTEMBER 9, 2018

We are proud to announce the general availability of Cloudera Altus Data Warehouse, the only cloud data warehousing service that brings the warehouse to the data. Modern data warehousing for the cloud.

dbt Developer Hub

NOVEMBER 22, 2022

Once your data warehouse is built out, the vast majority of your data will have come from other SaaS tools, internal databases, or customer data platforms (CDPs). Spreadsheets are the Swiss army knife of data processing. But there’s another unsung hero of the analytics engineering toolkit: the humble spreadsheet.

Cloudera

JANUARY 21, 2021

While cloud-native, point-solution data warehouse services may serve your immediate business needs, there are dangers to the corporation as a whole when you do your own IT this way. Cloudera Data Warehouse (CDW) is here to save the day! CDP is Cloudera’s new hybrid cloud, multi-function data platform.

ProjectPro

AUGUST 11, 2021

“Data Lake vs Data Warehouse = Load First, Think Later vs Think First, Load Later” The terms data lake and data warehouse are frequently stumbled upon when it comes to storing large volumes of data. Data Warehouse Architecture What is a Data lake?

Rockset

MARCH 1, 2023

If such query workloads create additional data lags then it will actively cause more harm by increasing your blind spot at the exact wrong time, the time when fraud is being perpetrated. OLTP databases aren’t built to ingest massive volumes of data streams and perform stream processing on incoming datasets.

Monte Carlo

AUGUST 25, 2023

Different vendors offering data warehouses, data lakes, and now data lakehouses all offer their own distinct advantages and disadvantages for data teams to consider. So let’s get to the bottom of the big question: what kind of data storage layer will provide the strongest foundation for your data platform?

Data Engineering Podcast

SEPTEMBER 22, 2019

Summary: Object storage is quickly becoming the unifying layer for data-intensive applications and analytics. Modern, cloud-oriented data warehouses and data lakes both rely on the durability and ease of use that it provides. How do you approach project governance and sustainability?

Towards Data Science

NOVEMBER 4, 2023

Often it is a data warehouse solution (DWH) in the central part of our infrastructure. Data warehouse example. It’s worth mentioning that its data frame transformations have been included in one of the basic methods of data loading for many modern data warehouses.

ThoughtSpot

MAY 31, 2023

All communication across tenant-specific compute instances, the common services, and external interaction with your cloud data warehouse are secured over the transport layer security (TLS) channel. Search and model assist hints are stored in the tenant specific cloud storage bucket.

Cloudera

SEPTEMBER 15, 2022

The consumption of the data should be supported through an elastic delivery layer that aligns with demand, but also provides the flexibility to present the data in a physical format that aligns with the analytic application, ranging from the more traditional data warehouse view to a graph view in support of relationship analysis.

Monte Carlo

JULY 19, 2023

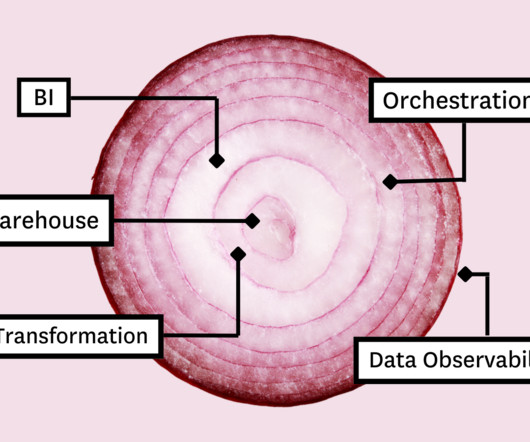

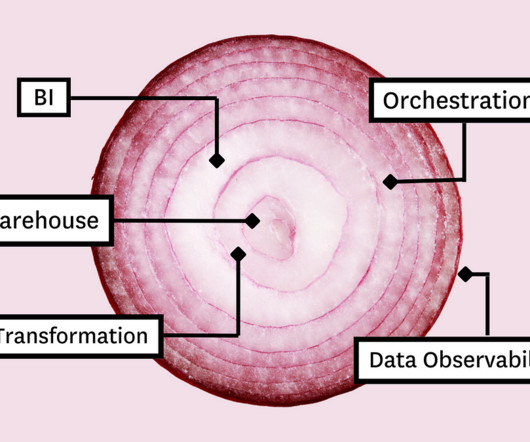

In this article, we’ll present you with the Five Layer Data Stack—a model for platform development consisting of five critical tools that will not only allow you to maximize impact but empower you to grow with the needs of your organization. Before you can model the data for your stakeholders, you need a place to collect and store it.

Cloudera

OCTOBER 26, 2020

*For clarity, the scope of the current certification covers CDP-Private Cloud Base. Certification of CDP-Private Cloud Experiences will be considered in the future. The certification process is designed to validate Cloudera products on a variety of Cloud, Storage & Compute Platforms. Encryption.

Cloudera

SEPTEMBER 23, 2022

Hardware (compute and storage) : As with PaaS data lakehouses, the CDP One data lakehouse resides in the cloud and uses virtualized compute. SaaS data lakehouse size and shape is automatically determined for you. You pay for the compute power and storage you use to drive your analytics.

Towards Data Science

APRIL 26, 2023

The purpose is simple: we want to show that we can develop directly against the cloud while minimizing the cognitive overhead of designing and building infrastructure. Plus, we will put together a design that minimizes costs compared to modern data warehouses, such as BigQuery or Snowflake.

Towards Data Science

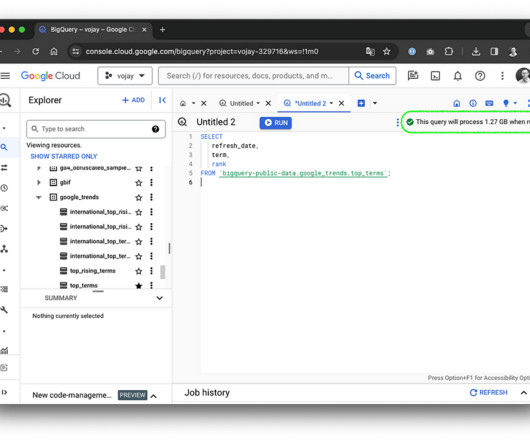

MARCH 5, 2024

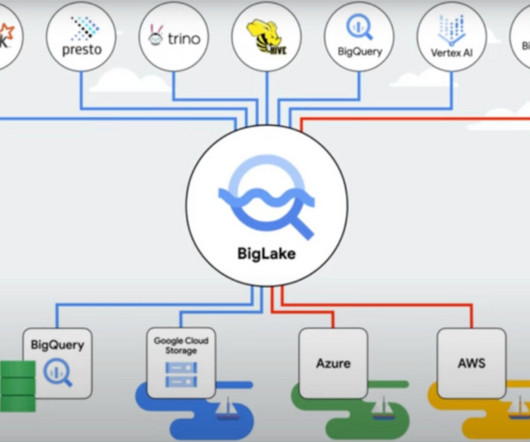

BigQuery basics and understanding costs: BigQuery is not just a tool but a package of scalable compute and storage technologies, with a fast network, all managed by Google. At its core, BigQuery is a serverless data warehouse for analytical purposes, with built-in features like machine learning (BigQuery ML).

RandomTrees

SEPTEMBER 6, 2020

Snowflake Overview: A data warehouse is a critical part of any business organization. Many cloud-based data warehouses are available in the market today; of these, let us focus on Snowflake. Snowflake is an analytical data warehouse that is provided as Software-as-a-Service (SaaS).

Cloudera

SEPTEMBER 28, 2021

The Ranger Authorization Service (RAZ) is a new service added to help provide fine-grained access control (FGAC) for cloud storage. RAZ for S3 and RAZ for ADLS introduce FGAC and Audit on CDP’s access to files and directories in cloud storage making it consistent with the rest of the SDX data entities.

Cloudera

OCTOBER 20, 2020

In addition, a lot of work has also been put into ensuring that Impala runs optimally in decoupled compute scenarios, where the data lives in object storage or remote HDFS. With this new change, the key operations in a query can be scaled vertically within a node if the input data is large enough (i.e.

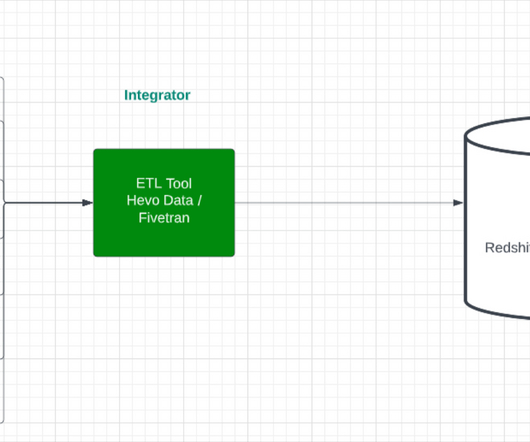

Hevo

JUNE 6, 2024

With the emergence of Cloud Data Warehouses, enterprises are gradually moving towards Cloud storage leaving behind their On-premise Storage systems. Amazon Web Services is one such Cloud Computing platform that offers Amazon Redshift as their Cloud Data Warehouse product. […]

Monte Carlo

FEBRUARY 25, 2025

ELT: When to Transform Your Data ETL (Extract, Transform, Load) ELT (Extract, Load, Transform) Which One Should You Choose? Batch vs. Stream Processing: How to Move Your Data Batch Processing Stream Processing Which One Should You Choose? Data Lakes vs. Data Warehouses: Where Should Your Data Live?

Towards Data Science

JULY 21, 2023

In this article, we’ll present you with the Five Layer Data Stack — a model for platform development consisting of five critical tools that will not only allow you to maximize impact but empower you to grow with the needs of your organization. Before you can model the data for your stakeholders, you need a place to collect and store it.

Cloudera

JULY 15, 2019

After taking this course, you’ll understand how databases provide structure to data and how this has changed as the volume and variety of data have increased. You’ll compare operational and analytic databases and learn what differentiates a modern distributed data warehouse.

Cloudyard

JUNE 16, 2024

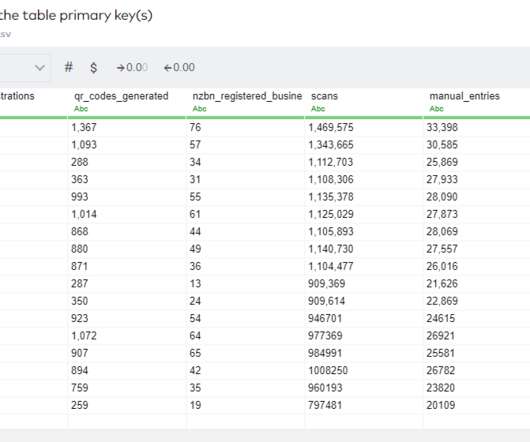

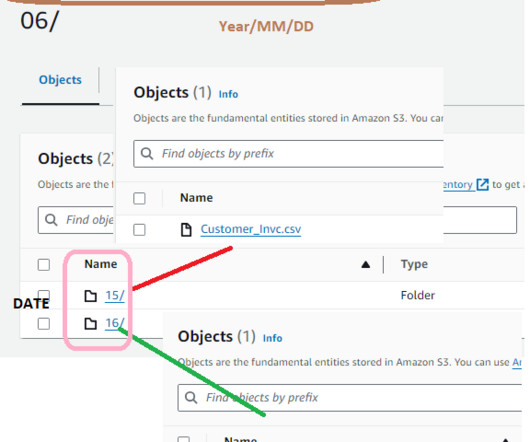

These files need to be ingested into a data warehouse like Snowflake for further processing and analysis. Automating this process ensures data is consistently and reliably loaded without manual intervention. Suppose you are a data engineer at a company that receives daily sales data from an external vendor.

Towards Data Science

DECEMBER 15, 2023

Taking a hard look at data privacy puts our habits and choices in a different context, however. Data scientists’ instincts and desires often work in tension with the needs of data privacy and security. Anyone who’s fought to get access to a database or data warehouse in order to build a model can relate.

Monte Carlo

APRIL 24, 2023

By accommodating various data types, reducing preprocessing overhead, and offering scalability, data lakes have become an essential component of modern data platforms , particularly those serving streaming or machine learning use cases. See our post: Data Lakes vs. Data Warehouses.

Cloudera

FEBRUARY 7, 2019

ATB Financial also now runs 40 nodes of HDP on its Google Cloud Platform (GCP), as well as an HDF cluster, as an ingest framework to shift data from an on-premises data warehouse into its HDP cloud cluster for storage and processing.

ProjectPro

JANUARY 24, 2023

With the global cloud data warehousing market likely to be worth $10.42 billion by 2026, cloud data warehousing is now more critical than ever. Cloud data warehouses offer significant benefits to organizations, including faster real-time insights, higher scalability, and lower overhead expenses.

Cloudera

MAY 18, 2021

Multi-Cloud Management. Single-cloud visibility with Cloudera Manager. Single-cloud visibility with Ambari. Policy-Driven Cloud Storage Permissions. Experience configuration / use case deployment: At the data lifecycle experience level (e.g., data streaming, data engineering, data warehousing etc.),

phData: Data Engineering

APRIL 4, 2023

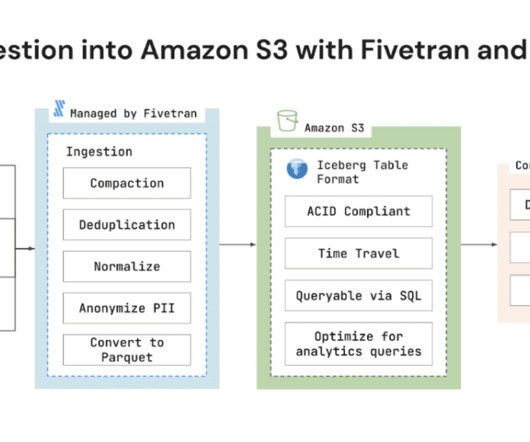

Customers who don’t necessarily want to put their data directly into a data warehouse like the Snowflake Data Cloud can now use Fivetran to build a performant, governed, managed dataset on top of S3 which can still be efficiently queried and manipulated from within their query engine of choice.

Rockset

MARCH 5, 2021

Understanding the space-time tradeoff in data analytics: In computer science, a space-time tradeoff is a way of solving a problem or calculation in less time by using more storage space, or by solving a problem in very little space by spending a long time. However, for each query it needs to scan your data.
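A small Python sketch may make the tradeoff concrete. It is an illustration only, not taken from the article: the `events` data and the function names are hypothetical. One function answers each query by scanning every row and uses no extra storage; the other spends extra memory on a precomputed index so that each query becomes a lookup.

```python
from collections import defaultdict

# Hypothetical sample data: purchase events per user.
events = [
    {"user": "ana", "amount": 10},
    {"user": "bo", "amount": 5},
    {"user": "ana", "amount": 7},
]


def total_by_scan(rows, user):
    """No extra storage: every query scans all rows (O(n) time per query)."""
    return sum(r["amount"] for r in rows if r["user"] == user)


def build_totals_index(rows):
    """Extra storage: precompute one total per distinct user, once."""
    index = defaultdict(int)
    for r in rows:
        index[r["user"]] += r["amount"]
    return index


def total_by_index(index, user):
    """Each query is a single dictionary lookup (O(1) on average)."""
    return index.get(user, 0)


totals_index = build_totals_index(events)
```

Both approaches return the same totals; the index trades memory proportional to the number of distinct users, plus the cost of keeping it up to date, for faster queries.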

Monte Carlo

FEBRUARY 15, 2023

Cloud data warehouses solve these problems. Belonging to the category of OLAP (online analytical processing) databases, popular data warehouses like Snowflake, Redshift, and BigQuery can query one billion rows in less than a minute. What is a data warehouse?
