Setting up Data Lake on GCP using Cloud Storage and BigQuery

Analytics Vidhya

FEBRUARY 25, 2023

The need for a data lake arises from the growing volume, variety, and velocity of data companies need to manage and analyze.

DataKitchen

NOVEMBER 5, 2024

The Bronze layer is the initial landing zone for all incoming raw data, capturing it in its unprocessed, original form. This foundational layer is a repository for various data types, from transaction logs and sensor data to social media feeds and system logs.
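One way to picture a Bronze landing step is the minimal sketch below. It assumes a local directory stands in for the landing zone; the `land_raw` helper, the `<source>/<date>/` layout, and the metadata sidecar are illustrative choices, not something the excerpt prescribes. The point it shows is that the payload is written byte for byte, with no parsing or cleaning.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def land_raw(bronze_root: Path, source: str, payload: bytes, ingested_at: datetime) -> Path:
    """Store the payload unmodified under <source>/<date>/ plus a metadata sidecar."""
    digest = hashlib.sha256(payload).hexdigest()
    target_dir = bronze_root / source / ingested_at.strftime("%Y-%m-%d")
    target_dir.mkdir(parents=True, exist_ok=True)

    # The raw bytes are written exactly as received.
    data_path = target_dir / f"{digest[:16]}.raw"
    data_path.write_bytes(payload)

    # Ingestion metadata lives next to the data, not inside it.
    meta = {
        "source": source,
        "ingested_at": ingested_at.isoformat(),
        "sha256": digest,
        "size_bytes": len(payload),
    }
    (target_dir / f"{digest[:16]}.meta.json").write_text(json.dumps(meta, indent=2))
    return data_path


# Hypothetical event; a temporary directory stands in for the landing zone.
demo_root = Path(tempfile.mkdtemp())
raw_event = b'{"order_id": 1, "amount": " 12.50 "}\n'
landed = land_raw(demo_root, "orders", raw_event, datetime(2024, 1, 2, tzinfo=timezone.utc))
print(landed.relative_to(demo_root))
```

Naming files by content hash is one possible design choice: landing the same payload twice overwrites the same file instead of creating a duplicate.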

ProjectPro

JUNE 6, 2025

Most of us have observed that data scientist is usually labeled the hottest job of the 21st century, but is it the only desirable job? No, that is not the only job in the data world. Store the data in Google Cloud Storage to ensure scalability and reliability. Data transformation and cleaning techniques.

ProjectPro

JUNE 6, 2025

However, as the modern data ecosystem encompasses a mix of unstructured and semi-structured data (spanning text, images, videos, IoT streams, and more), these legacy systems fall short in terms of scalability, flexibility, and cost efficiency. That’s where data lakes come in.

ProjectPro

JUNE 6, 2025

Think of the data integration process as building a giant library where all your data's scattered notebooks are organized into chapters. You define clear paths for data to flow, from extraction (gathering structured/unstructured data from different systems) to transformation (cleaning the raw data, processing the data, etc.)

ProjectPro

JUNE 6, 2025

Top 3 Azure Databricks Delta Lake Project Ideas for Practice: The following are a few projects involving Delta Lake. ETL on Movies data: This project involves ingesting data from Kafka and building Medallion architecture (bronze, silver, and gold layers) Data Lakehouse. What format does Delta Lake use for storing data?

ProjectPro

JUNE 6, 2025

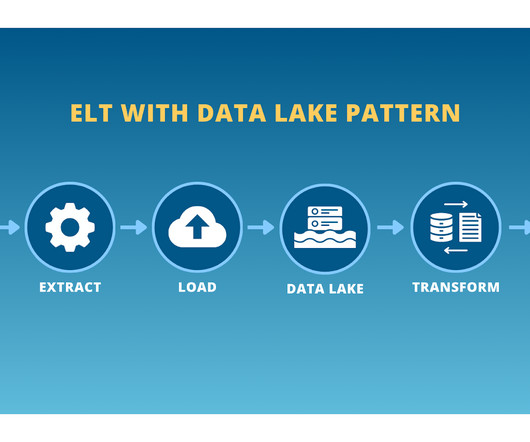

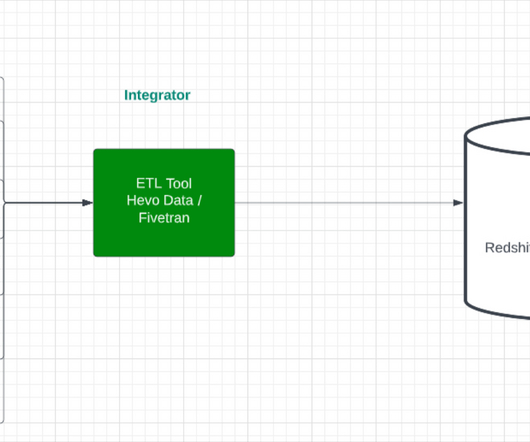

ELT involves three core stages. Extract: Importing data from the source server is the initial stage in this process. Load: The pipeline copies data from the source into the destination system, which could be a data warehouse or a data lake. Scalability: ELT can be highly adaptable when using raw data.
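The ELT order (extract, load, then transform inside the destination) can be sketched as follows. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for the warehouse or lake engine, and the `raw_orders` and `orders` tables and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical source rows: every field arrives as text, some of it messy.
source_rows = [
    ("1", " Alice ", "12.50"),
    ("2", "bob", "7"),
    ("3", "Carol", "not-a-number"),
]

conn = sqlite3.connect(":memory:")  # stands in for the destination system

# Extract + Load: copy the source into the destination unchanged.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", source_rows)

# Transform: runs afterwards, inside the destination, and leaves raw_orders intact.
# The GLOB filters keep only amounts made of digits and dots.
conn.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           TRIM(customer)            AS customer,
           CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE amount GLOB '[0-9]*' AND amount NOT GLOB '*[^0-9.]*'
""")

clean = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(clean, raw_count)
```

Because the raw table is kept, a different transformation can be applied later without going back to the source system.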

ProjectPro

JUNE 6, 2025

Keeping data in data warehouses or data lakes helps companies centralize the data for several data-driven initiatives. While data warehouses contain transformed data, data lakes contain unfiltered and unorganized raw data.

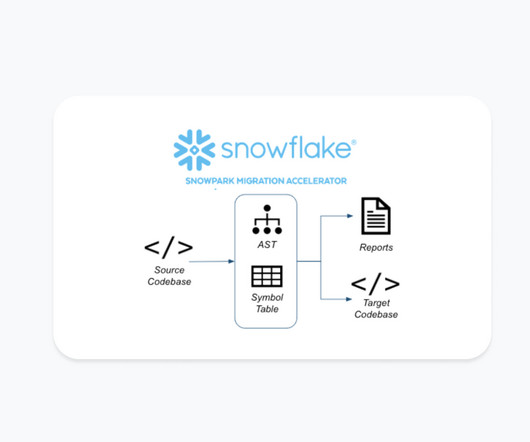

Snowflake

JUNE 20, 2024

This is ideal for tasks such as data aggregation, reporting, or batch predictions. Ingestion Pipelines: Handling data from cloud storage and dealing with different formats can be efficiently managed with the accelerator.

ProjectPro

JUNE 6, 2025

Storage and Persistence Layer: Once processed, the data is stored in this layer. Stream processing engines often have in-memory storage for temporary data, while durable storage solutions like Apache Hadoop, Amazon S3, or Google Cloud Storage serve as repositories for long-term storage of processed data.

ProjectPro

JUNE 6, 2025

Your SQL skills as a data engineer are crucial for data modeling and analytics tasks. Making data accessible for querying is a common task for data engineers. Collecting the raw data, cleaning it, modeling it, and letting their end users access the clean data are all part of this process.

Edureka

APRIL 14, 2025

Microsoft offers a leading solution for business intelligence (BI) and data visualization through this platform. It empowers users to build dynamic dashboards and reports, transforming raw data into actionable insights. This allows seamless data movement and end-to-end workflows within the same environment.

ProjectPro

JUNE 6, 2025

Data engineers usually rely on database management systems, and popular choices are MySQL, Oracle Database, Microsoft SQL Server, etc. Real-world data, however, is not always stored in rows and columns.

Knowledge Hut

DECEMBER 26, 2023

Look for AWS Cloud Practitioner Essentials Training online to learn the fundamentals of AWS Cloud Computing and become an expert in handling the AWS Cloud platform. Informatica: Informatica is a leading industry tool used for extracting, transforming, and cleaning up raw data.

ProjectPro

JUNE 6, 2025

Using the Mainframe Connector, you can submit BigQuery jobs from mainframe-based batch jobs specified by job control language (JCL) and upload data to Cloud Storage. After uploading the ORC data to Cloud Storage, register it as an external table in BigQuery. This necessitates data consolidation.

ProjectPro

JUNE 6, 2025

Investing time to understand the data can prevent errors later in AI development. Data Cleaning: Data cleaning is essential to remove errors and inconsistencies from the raw data. With thorough data cleaning, the insights drawn from the data improve, leading to more accurate predictions.

Cloudera

JANUARY 21, 2021

Of high value to existing customers, Cloudera’s Data Warehouse service has a unique, separated architecture. Separate storage. Cloudera’s Data Warehouse service allows raw data to be stored in the cloud storage of your choice (S3, ADLSg2). Get your data in place.

ProjectPro

JUNE 6, 2025

Data Factory fully supports CI/CD of your data pipelines using Azure DevOps and GitHub. i) We should use the compression option to get the data in a compressed mode while loading from on-prem servers, which is then de-compressed while writing on the cloud storage.
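The compress-in-transit idea can be illustrated with a small sketch. It does not use Data Factory's own settings; it only shows the general pattern with Python's standard `gzip` module. The `send_compressed` and `receive_and_store` helpers are hypothetical, and a plain dictionary stands in for the cloud storage target.

```python
import gzip


def send_compressed(data: bytes) -> bytes:
    """Source side: compress before the data leaves the on-prem server."""
    return gzip.compress(data)


def receive_and_store(wire_bytes: bytes, store: dict, key: str) -> None:
    """Destination side: decompress while writing to storage."""
    store[key] = gzip.decompress(wire_bytes)


# Hypothetical, highly repetitive CSV payload.
payload = b"id,amount\n" + b"1,12.50\n" * 1000
wire = send_compressed(payload)

cloud_store = {}  # stand-in for a cloud storage bucket or container
receive_and_store(wire, cloud_store, "landing/orders.csv")

print(len(payload), "bytes at source,", len(wire), "bytes on the wire")
```

Only the transfer is compressed here; what lands in storage is the original, uncompressed content.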

ProjectPro

JUNE 6, 2025

Therefore, data engineers must gain a solid understanding of these Big Data tools. Machine Learning: Machine learning helps speed up the processing of humongous data by identifying trends and patterns. It is possible to classify raw data using machine learning algorithms, identify trends, and turn data into insights.

ProjectPro

JUNE 6, 2025

Data wrangling is as essential to the data science process as the sun is important for plants to complete the process of photosynthesis. Data wrangling involves extracting the most valuable information from the data per a business's objectives and requirements. in a different column.

Cloudera

SEPTEMBER 15, 2022

The data products are packaged around the business needs and in support of the business use cases. This step requires curation, harmonization, and standardization from the raw data into the products. Ramsey International Modern Data Platform Architecture.

ProjectPro

JUNE 6, 2025

When you create an index, the data and embeddings are stored in a structured format. Persisting these indexes saves them to a storage medium (local storage or database) for reuse without reprocessing raw data every time. This is crucial for the efficiency and scalability of large language model applications.
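A minimal sketch of persisting an index for reuse is shown below. It is not tied to any particular LLM framework: `toy_embed` is a hypothetical stand-in for a real embedding model, and JSON on local disk is just one possible storage medium.

```python
import json
import tempfile
from pathlib import Path


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: vowel counts."""
    lowered = text.lower()
    return [float(lowered.count(v)) for v in "aeiou"]


def build_index(docs: dict[str, str]) -> dict[str, dict]:
    """The expensive step: embed every raw document."""
    return {
        doc_id: {"text": text, "embedding": toy_embed(text)}
        for doc_id, text in docs.items()
    }


def persist_index(index: dict, path: Path) -> None:
    path.write_text(json.dumps(index))


def load_index(path: Path) -> dict:
    return json.loads(path.read_text())


docs = {"d1": "Raw data lands in the lake", "d2": "Queries run in the warehouse"}
index = build_index(docs)

index_path = Path(tempfile.mkdtemp()) / "index.json"
persist_index(index, index_path)

# A later run can skip build_index entirely and reuse the stored embeddings.
reloaded = load_index(index_path)
print(reloaded["d1"]["embedding"])
```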

Precisely

OCTOBER 5, 2023

According to the 2023 Data Integrity Trends and Insights Report, published in partnership between Precisely and Drexel University’s LeBow College of Business, 77% of data and analytics professionals say data-driven decision-making is the top goal of their data programs. That’s where data enrichment comes in.

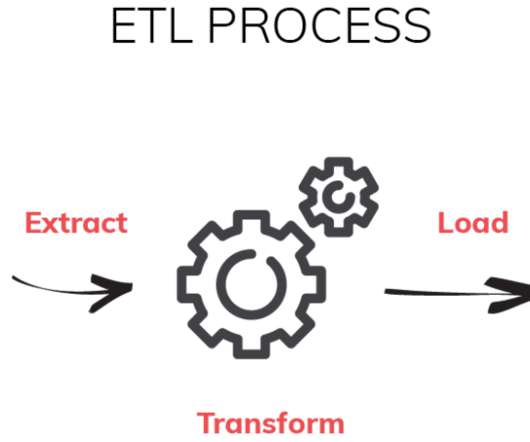

ProjectPro

JUNE 6, 2025

Here are the critical components of an ETL data pipeline: Data Sources: Data sources are the starting point of an ETL pipeline. These can be diverse and may include databases, cloud storage, application logs, external APIs, and more. Data Extraction: Extraction is the first step of the ETL process.
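The components listed above (a data source, an extraction step, and what follows) can be sketched as a tiny ETL pipeline. Everything named here is an assumption for illustration: the CSV text plays the data source, SQLite plays the destination, and `extract`, `transform`, and `load` are hypothetical helpers.

```python
import csv
import io
import sqlite3

# Hypothetical data source: a CSV export with one malformed reading.
CSV_SOURCE = """sensor_id,location,temp_c
10, roof ,21.5
11,basement,18
12,garage,n/a
"""


def extract(csv_text: str) -> list[dict]:
    """Extraction: read rows from the source as-is."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transformation: type the fields and drop rows that cannot be parsed."""
    cleaned = []
    for row in rows:
        try:
            temp = float(row["temp_c"])
        except ValueError:
            continue  # skip malformed readings
        cleaned.append((int(row["sensor_id"]), row["location"].strip(), temp))
    return cleaned


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Loading: only the already-cleaned rows reach the destination."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(sensor_id INTEGER PRIMARY KEY, location TEXT, temp_c REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()


etl_conn = sqlite3.connect(":memory:")
load(etl_conn, transform(extract(CSV_SOURCE)))
loaded = etl_conn.execute("SELECT * FROM readings ORDER BY sensor_id").fetchall()
print(loaded)
```

In this ordering the malformed row never reaches the destination, which is the trade-off of transforming before loading.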

WeCloudData

OCTOBER 19, 2021

Conclusion: WeCloudData helped a client build a flexible data pipeline to address the needs of multiple business units requiring different sets, views, and timelines of job market data.

ProjectPro

JUNE 6, 2025

Imagine being at the forefront of transforming raw data into actionable insights, seamlessly deploying and managing machine learning models. Familiarity with cloud computing fundamentals (any cloud platform). With the global Machine Learning Operations (MLOps) market size likely to reach USD75.42

Monte Carlo

FEBRUARY 25, 2025

Banks, healthcare systems, and financial reporting often rely on ETL to maintain highly structured, trustworthy data from the start. ELT (Extract, Load, Transform): ELT flips the order, storing raw data first and applying transformations later. Common solutions include AWS S3, Azure Data Lake, and Google Cloud Storage.

ProjectPro

JUNE 6, 2025

Data Lake vs Data Warehouse - Data Timeline: Data lakes retain all data, including data that is not currently in use. Hence, data can be kept in data lakes indefinitely so that it can be analyzed further. Raw data is allowed to flow into a data lake, sometimes with no immediate use.

Ascend.io

DECEMBER 14, 2022

Low in Visibility: End users won’t be able to access all the data in the final destination, only the data that was transformed and loaded. First, every transformation performed on the data pushes you further from the raw data and obscures some of the underlying information. This causes two issues.

Meltano

OCTOBER 5, 2022

What Is Data Engineering? Data engineering is the process of designing systems for collecting, storing, and analyzing large volumes of data. Put simply, it is the process of making raw data usable and accessible to data scientists, business analysts, and other team members who rely on data.

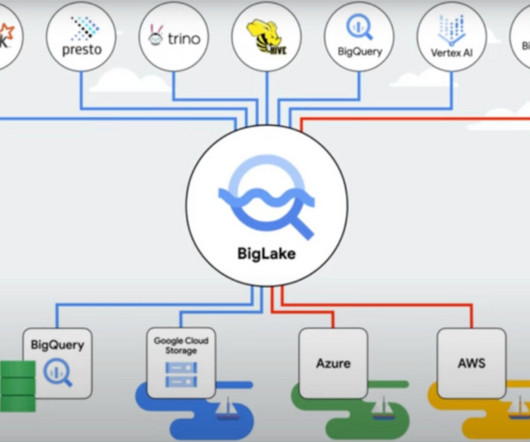

Monte Carlo

APRIL 24, 2023

By accommodating various data types, reducing preprocessing overhead, and offering scalability, data lakes have become an essential component of modern data platforms, particularly those serving streaming or machine learning use cases. Google Cloud Platform and/or BigLake: Google offers a couple of options for building data lakes.

Ascend.io

FEBRUARY 23, 2024

If your core data systems are still running in a private data center or pushed to VMs in the cloud, you have some work to do. To take advantage of cloud-native services, some of your data must be replicated, copied, or otherwise made available to native cloud storage and databases.

Workfall

JULY 18, 2023

In the vast realm of data engineering and analytics, a tool emerged that felt like a magical elixir: DBT, the Data Build Tool. Think of DBT as the trusty sidekick that accompanies data analysts and engineers on their quests to transform raw data into golden insights.

U-Next

SEPTEMBER 7, 2022

Autonomous data warehouse from Oracle. What is Data Lake? Essentially, a data lake is a repository of raw data from disparate sources. A data lake stores current and historical data similar to a data warehouse. Gen 2 Azure Data Lake Storage. Synapse on Microsoft Azure.

AltexSoft

MAY 12, 2023

These robust security measures ensure that data is always secure and private. There are several widely used unstructured data storage solutions, such as data lakes (e.g., Amazon S3, Google Cloud Storage, Microsoft Azure Blob Storage), NoSQL databases, and big data processing frameworks (e.g., Hadoop, Apache Spark).

ProjectPro

JUNE 6, 2025

Data Collection & Preprocessing: Gather historical sales data, product demand reports, and macroeconomic indicators. Clean and preprocess raw data, handling missing values and seasonality trends. Data Collection & Preprocessing: Aggregate historical sales, suppliers, and warehouse raw data.

Knowledge Hut

FEBRUARY 2, 2024

Cloud Computing Course As more and more businesses from various fields are starting to rely on digital data storage and database management, there is an increased need for storage space. And what better solution than cloud storage?

ProjectPro

JUNE 6, 2025

Excel stores data points in each cell in its most basic format. Any numerical data, such as sales data, are input into a spreadsheet for better visibility and management. A well-built Excel spreadsheet arranges the raw data in an accessible manner, making it simpler to get actionable insights.

Confluent

OCTOBER 16, 2019

There’s also some static reference data that is published on web pages. After wrangling the data. With the raw data in Kafka, we can now start to process it. Since we’re using Kafka, we are working on streams of data. SELECT * FROM TRAIN_CANCELLATIONS_00 ; Data sinks.

Ascend.io

AUGUST 31, 2023

Read More: What is ETL? – (Extract, Transform, Load) ELT for the Data Lake Pattern As discussed earlier, data lakes are highly flexible repositories that can store vast volumes of raw data with very little preprocessing. Their task is straightforward: take the raw data and transform it into a structured, coherent format.

Monte Carlo

AUGUST 25, 2023

While data lake vendors are constantly emerging to provide more managed services — like Databricks’ Delta Lake, Dremio, and even Snowflake — traditionally, data lakes have been created by combining various technologies. Storage can utilize S3, Google Cloud Storage, Microsoft Azure Blob Storage, or Hadoop HDFS.

Knowledge Hut

NOVEMBER 16, 2023

Some of these skills are part of your data science expertise, and the rest are part of cloud proficiency. Data Pre-processing: Data pre-processing is the preliminary step towards any data science application. Azure Storage is a cloud storage solution that enables us to store and access data in the cloud.

Monte Carlo

FEBRUARY 15, 2023

Cleaning: Bad data can derail an entire company, and the foundation of bad data is unclean data. Therefore, it is of immense importance that data entering a data warehouse be cleaned. Key Functions of a Data Warehouse: Any data warehouse should be able to load data, transform data, and secure data.
