Interesting startup idea: benchmarking cloud platform pricing

The Pragmatic Engineer

OCTOBER 17, 2024

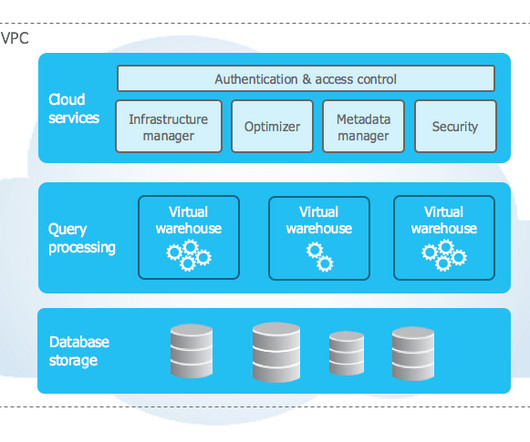

A growing number of cloud providers rent out virtual machines, the largest being AWS, GCP, and Azure. Other popular services include Oracle Cloud Infrastructure (OCI), Germany-based Hetzner, France-headquartered OVH, and Scaleway. The question is how to create a viable business from cloud benchmarking.
