How to Become an Azure Data Engineer | Edureka

Edureka

FEBRUARY 7, 2023

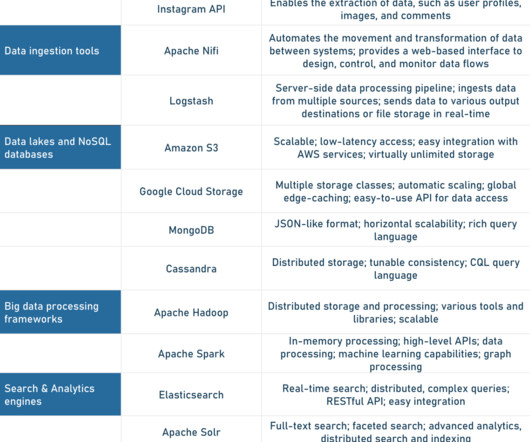

An Azure Data Engineer designs, implements, and maintains data management and data processing systems on the Microsoft Azure cloud platform. They work with large and complex data sets and make sure that data is stored, processed, and secured efficiently.
