Beyond the Data Complexity: Building Agile, Reusable Data Architectures

The Modern Data Company

JULY 29, 2024

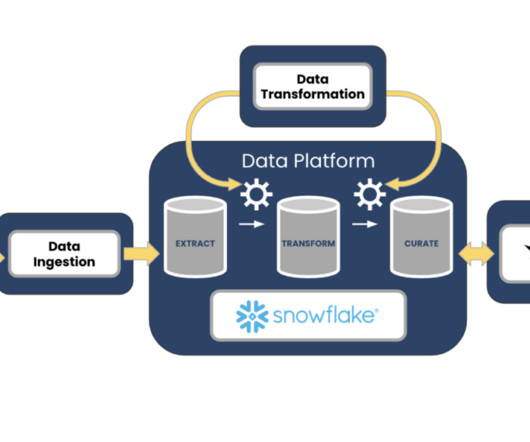

BCG research reveals a striking trend: the number of unique data vendors in large companies has nearly tripled over the past decade, growing from about 50 to 150. Yet this dramatic increase in vendors hasn’t led to the expected data revolution; it has added complexity and left organizations less agile. The limited reusability of data assets makes this agility challenge worse.
