The Challenge of Data Quality and Availability—And Why It’s Holding Back AI and Analytics

Striim

APRIL 18, 2025

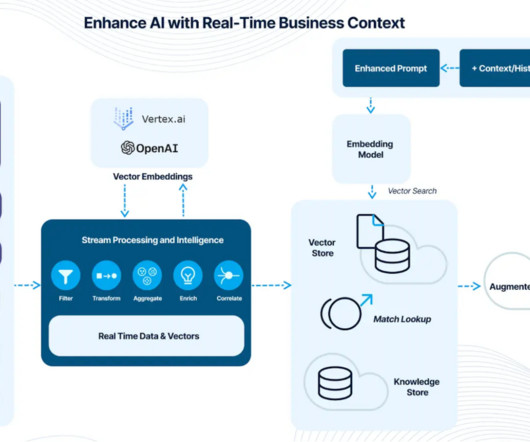

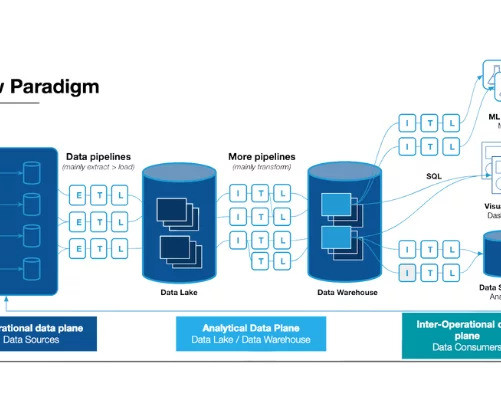

Without high-quality, available data, companies risk misinformed decisions, compliance violations, and missed opportunities.

Why AI and Analytics Require Real-Time, High-Quality Data

To extract meaningful value from AI and analytics, organizations need data that is continuously updated, accurate, and accessible.
