They Handle 500B Events Daily. Here’s Their Data Engineering Architecture.

Monte Carlo

NOVEMBER 12, 2024

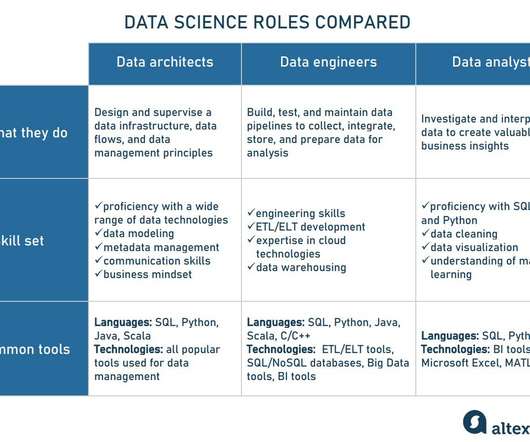

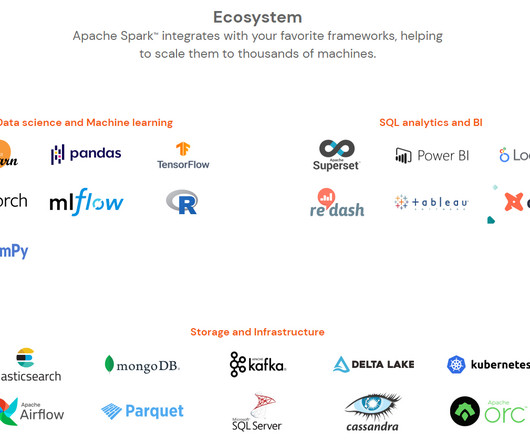

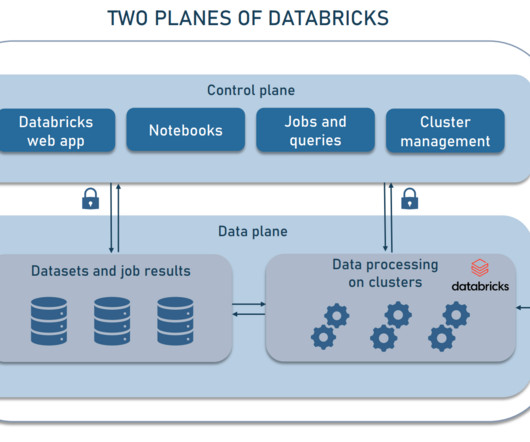

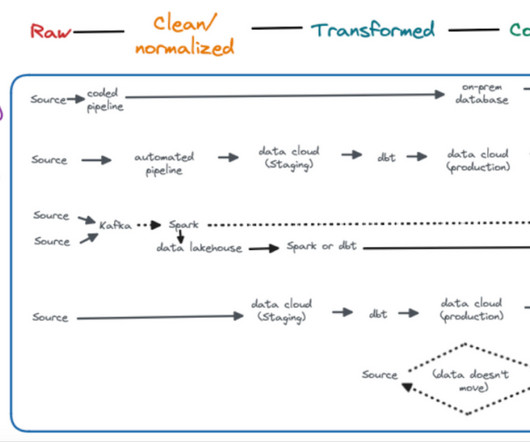

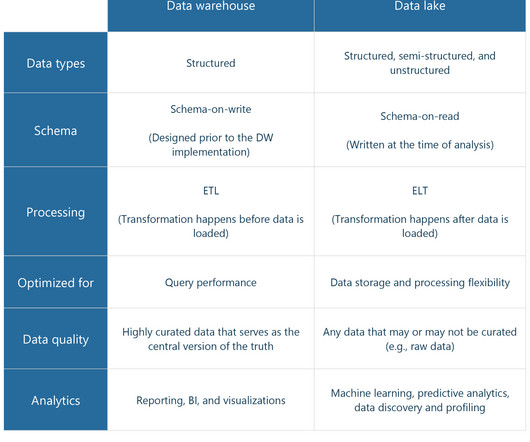

A data engineering architecture is the structural framework that determines how data flows through an organization, from collection and storage to processing and analysis. It’s the blueprint we data engineers follow to transform raw data into valuable insights.
