What is a data processing analyst?

Edureka

AUGUST 2, 2023

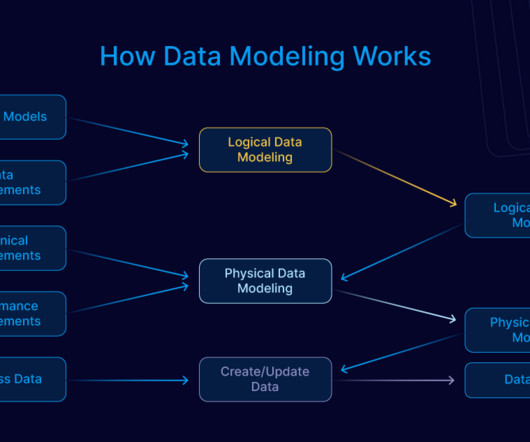

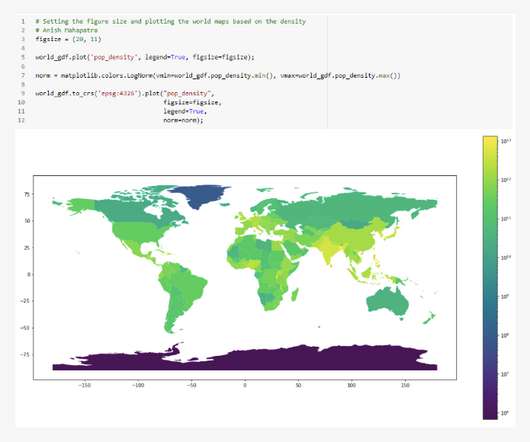

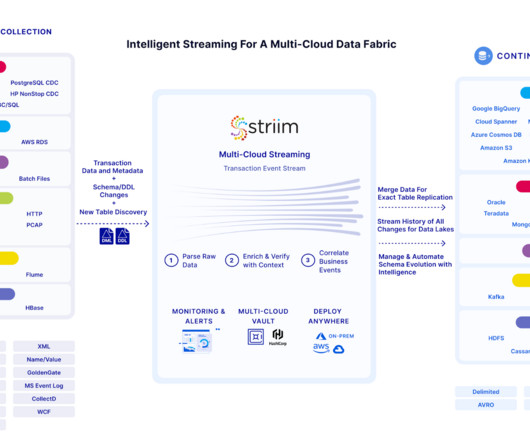

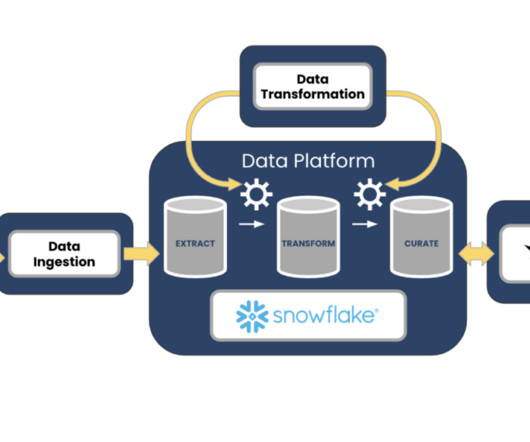

Raw data, however, is frequently disorganised, unstructured, and difficult to work with directly. This is where data processing analysts come in. Let’s take a deep dive into the subject and look at what this blog covers.

Table of Contents

What Is Data Processing Analysis?
