Deploying AI to Enhance Data Quality and Reliability

Ascend.io

SEPTEMBER 6, 2024

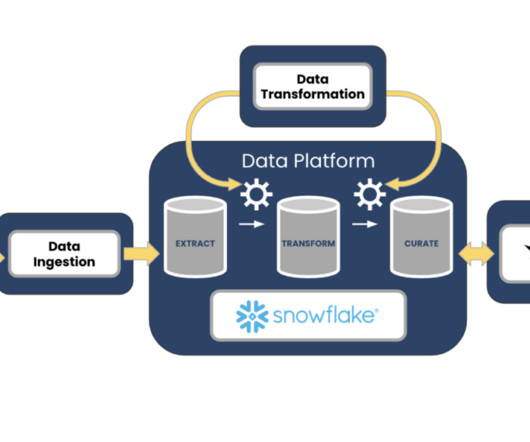

AI-driven data quality workflows use machine learning to automate data cleansing, detect anomalies, and validate data. Data quality is the backbone of successful data engineering projects, so integrating AI into these workflows helps keep data reliable and supports better-informed business decisions.
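The paragraph above names validation and anomaly detection as steps an automated workflow can take over. Below is a minimal sketch of those two steps. It assumes a batch of order records with hypothetical fields `order_id` and `amount`, and the helper names `validate` and `flag_anomalies` are also illustrative. The anomaly check is a simple statistical stand-in (a median/MAD-based modified z-score with the commonly used 3.5 cutoff). It is not the machine learning model the article refers to. A production workflow might replace it with a learned detector while keeping the same interface.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Tuple


@dataclass
class Record:
    # Hypothetical schema for illustration only.
    order_id: str
    amount: Optional[float]


def validate(records: List[Record]) -> Tuple[List[Record], List[Record]]:
    """Split records into (valid, invalid) using simple rule-based checks."""
    valid, invalid = [], []
    for r in records:
        # Reject missing IDs, missing amounts, and negative amounts.
        if not r.order_id or r.amount is None or r.amount < 0:
            invalid.append(r)
        else:
            valid.append(r)
    return valid, invalid


def flag_anomalies(values: List[float], threshold: float = 3.5) -> List[int]:
    """Return indices whose modified z-score exceeds `threshold`.

    Uses the median and median absolute deviation (MAD), which the outliers
    being searched for distort less than they distort the mean and standard
    deviation. This is a statistical stand-in for an ML-based detector.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values are identical, so the score is undefined.
        return []
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]


if __name__ == "__main__":
    batch = [
        Record("a1", 20.0), Record("a2", 22.5), Record("a3", 19.0),
        Record("a4", 21.0), Record("a5", 950.0),
        Record("", 10.0), Record("a7", None), Record("a8", -5.0),
    ]
    valid, invalid = validate(batch)
    print("invalid:", [r.order_id for r in invalid])
    amounts = [r.amount for r in valid]
    print("anomalous:", [valid[i].order_id for i in flag_anomalies(amounts)])
```

On this sample batch, the rule-based pass routes the three malformed records to the invalid list. The statistical pass then flags only the 950.0 order among the remaining amounts. Running the cheap deterministic rules first keeps malformed values from skewing the anomaly statistics.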
