Top 6 Microsoft HDFS Interview Questions

Analytics Vidhya

MARCH 5, 2023

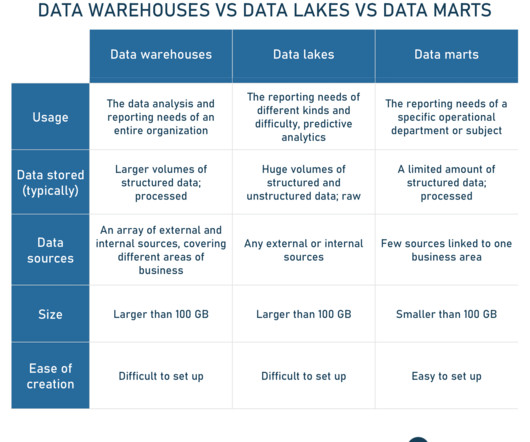

Introduction

Microsoft Azure HDInsight (or Microsoft HDFS) is a cloud-based version of the Hadoop Distributed File System (HDFS). HDFS is a distributed file system that runs on commodity hardware and manages massive data collections. HDInsight provides a fully managed, cloud-based environment for processing and analyzing enormous volumes of data.
