Gain an AI Advantage with Data Governance and Quality

Precisely

AUGUST 29, 2024

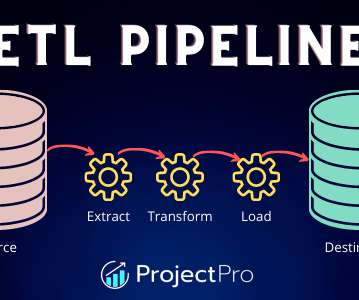

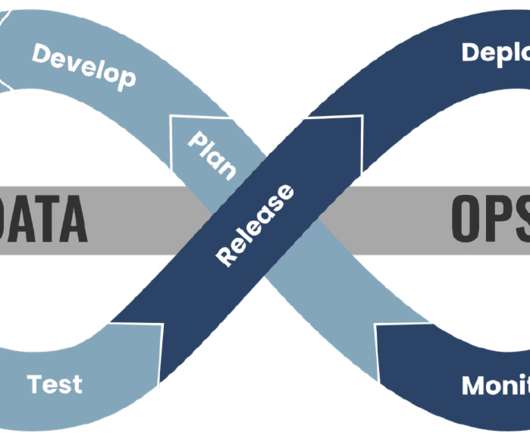

Key Takeaways

- Data quality ensures your data is accurate, complete, reliable, and up to date, powering AI conclusions that reduce costs and increase revenue and compliance.
- Data observability continuously monitors data pipelines and alerts you to errors and anomalies.

stored: where is it located?
