How I Optimized Large-Scale Data Ingestion

databricks

SEPTEMBER 6, 2024

Explore being a PM intern at a technical powerhouse like Databricks, learning how to advance data ingestion tools to drive efficiency.

Data Ingestion Related Topics

KDnuggets

APRIL 6, 2022

Learn tricks on importing various data formats using Pandas with a few lines of code. We will be learning to import SQL databases, Excel sheets, HTML tables, CSV, and JSON files with examples.
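As a rough illustration of the kind of import the teaser describes, here is a minimal sketch that loads the same two records from CSV, JSON, and a SQL table with Pandas. The sample data, the `people` table name, and the in-memory SQLite database are assumptions made for this example and are not taken from the linked tutorial.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical sample data, used only for illustration.
csv_text = "id,name\n1,Ada\n2,Grace\n"
json_text = '[{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]'

# CSV and JSON: wrap the text in a file-like object and let Pandas parse it.
csv_df = pd.read_csv(io.StringIO(csv_text))
json_df = pd.read_json(io.StringIO(json_text))

# SQL: write the frame to an in-memory SQLite table, then query it back.
conn = sqlite3.connect(":memory:")
csv_df.to_sql("people", conn, index=False)
sql_df = pd.read_sql_query("SELECT id, name FROM people ORDER BY id", conn)
conn.close()

print(csv_df)
print(json_df)
print(sql_df)
```

In practice the `io.StringIO` wrappers would be replaced by file paths or URLs, and the SQLite connection by a connection to the actual database.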

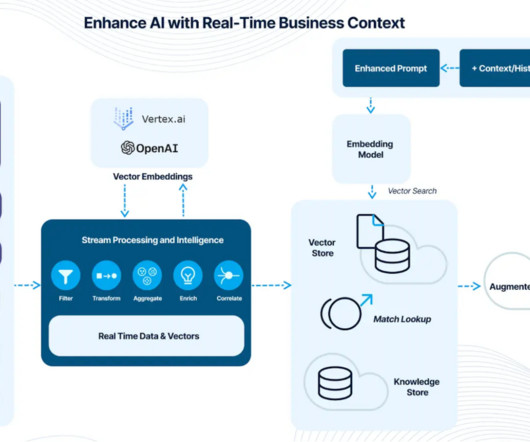

Netflix Tech

MARCH 7, 2023

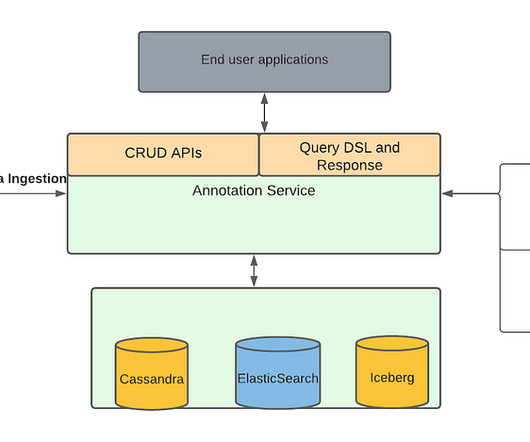

Data ingestion pipeline with Operation Management was originally published in Netflix TechBlog on Medium, where people are continuing the conversation by highlighting and responding to this story. For example, they can store the annotations in a blob storage like S3 and give us a link to the file as part of the single API.

Scribd Technology

JANUARY 14, 2025

In a recent session with the Delta Lake project, I was able to share the work led by Kuntal Basu and a number of other people to dramatically improve the efficiency and reliability of our online data ingestion pipeline. ... as they take you behind the scenes of Scribd's data ingestion setup.

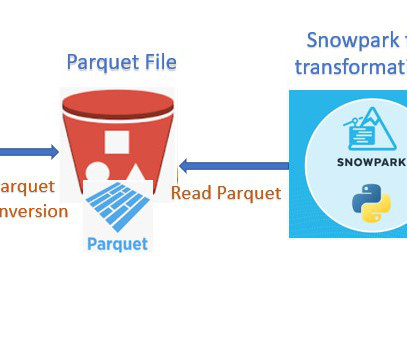

Cloudyard

JUNE 6, 2023

Snowflake Output. The post Data Ingestion with Glue and Snowpark appeared first on Cloudyard. Technical Implementation: GLUE Job.

Hevo

FEBRUARY 23, 2025

Organizations generate tons of data every second, yet 80% of enterprise data remains unstructured and unleveraged (Unstructured Data). Organizations need data ingestion and integration to realize the complete value of their data assets.

KDnuggets

SEPTEMBER 11, 2024

Learn how to create a data science pipeline with a complete structure.

databricks

DECEMBER 10, 2024

Data engineering teams are frequently tasked with building bespoke ingestion solutions for myriad custom, proprietary, or industry-specific data sources. Many teams find that.

Analytics Vidhya

MARCH 7, 2023

Introduction: Apache Flume is a tool for gathering, aggregating, and delivering huge amounts of streaming data from diverse sources, such as log files and events, to centralized data storage. Flume is highly dependable, distributed, and customizable.

Hevo

APRIL 26, 2024

To accommodate lengthy processes on such data, companies turn toward Data Pipelines which tend to automate the work of extracting data, transforming it and storing it in the desired location. In the working of such pipelines, Data Ingestion acts as the […]

Confluent

JANUARY 22, 2024

The new fully managed BigQuery Sink V2 connector for Confluent Cloud offers streamlined data ingestion and cost-efficiency. Learn about the Google-recommended Storage Write API and OAuth 2.0 support.

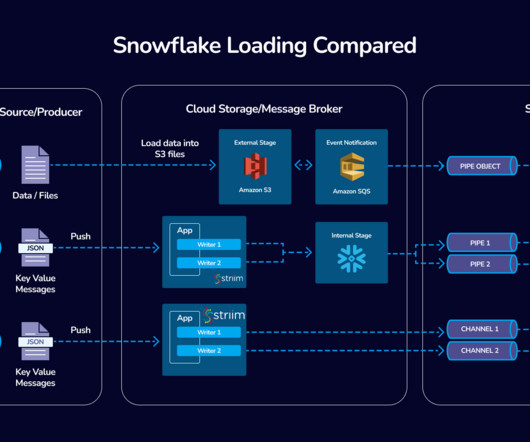

Striim

NOVEMBER 13, 2023

Introduction: In the fast-evolving world of data integration, Striim’s collaboration with Snowflake stands as a beacon of innovation and efficiency. Striim’s integration with Snowpipe Streaming represents a significant advancement in real-time data ingestion into Snowflake.

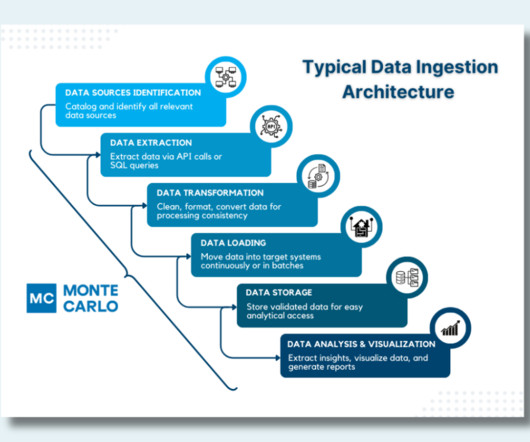

Monte Carlo

MAY 28, 2024

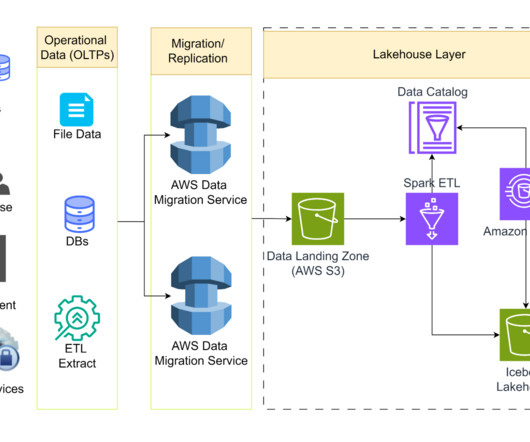

A data ingestion architecture is the technical blueprint that ensures that every pulse of your organization’s data ecosystem brings critical information to where it’s needed most. A typical data ingestion flow. Popular Data Ingestion Tools: Choosing the right ingestion technology is key to a successful architecture.

databricks

MARCH 29, 2024

Overview: In the competitive world of professional hockey, NHL teams are always seeking to optimize their performance. Advanced analytics has become increasingly important.

Analytics Vidhya

FEBRUARY 20, 2023

Introduction: Azure Data Factory (ADF) is a cloud-based data ingestion and ETL (Extract, Transform, Load) tool. The data-driven workflow in ADF orchestrates and automates data movement and data transformation.

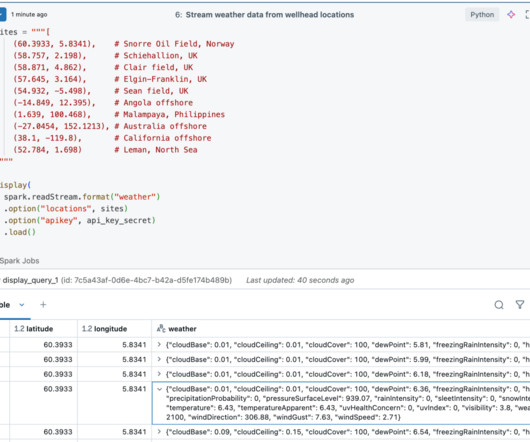

Snowflake

MARCH 2, 2023

“This solution is both scalable and reliable, as we have been able to effortlessly ingest upwards of 1GB/s throughput.” Rather than streaming data from source into cloud object stores then copying it to Snowflake, data is ingested directly into a Snowflake table to reduce architectural complexity and end-to-end latency.

Monte Carlo

FEBRUARY 20, 2024

At the heart of every data-driven decision is a deceptively simple question: How do you get the right data to the right place at the right time? The growing field of data ingestion tools offers a range of answers, each with implications to ponder.

DataKitchen

NOVEMBER 5, 2024

You have typical data ingestion layer challenges in the bronze layer: lack of sufficient rows, delays, changes in schema, or more detailed structural/quality problems in the data. Data missing or incomplete at various stages is another critical quality issue in the Medallion architecture.

Rockset

MARCH 1, 2023

When you deconstruct the core database architecture, deep in the heart of it you will find a single component that is performing two distinct competing functions: real-time data ingestion and query serving. When data ingestion has a flash flood moment, your queries will slow down or time out making your application flaky.

KDnuggets

JULY 29, 2024

Learn to build the end-to-end data science pipelines from data ingestion to data visualization using Pandas pipe method.

Snowflake

JUNE 13, 2024

But at Snowflake, we’re committed to making the first step the easiest — with seamless, cost-effective data ingestion to help bring your workloads into the AI Data Cloud with ease. Like any first step, data ingestion is a critical foundational block. Ingestion with Snowflake should feel like a breeze.

Cloudera

DECEMBER 4, 2024

For more than a decade, Cloudera has been an ardent supporter and committee member of Apache NiFi, long recognizing its power and versatility for data ingestion, transformation, and delivery.

databricks

MAY 23, 2024

We're excited to announce native support in Databricks for ingesting XML data. XML is a popular file format for representing complex data.

Cloudyard

NOVEMBER 12, 2024

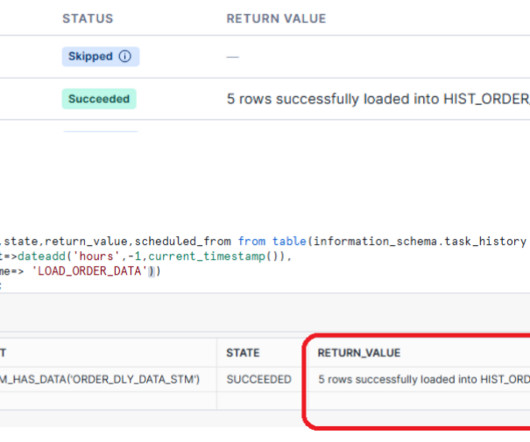

This approach not only minimizes costs but also maximizes efficiency by performing essential operations only when new data is available. This use case will walk through the setup of a Snowflake task called LOAD_ORDER_DATA, which performs automated data ingestion and validation.

Data Engineering Weekly

MARCH 5, 2025

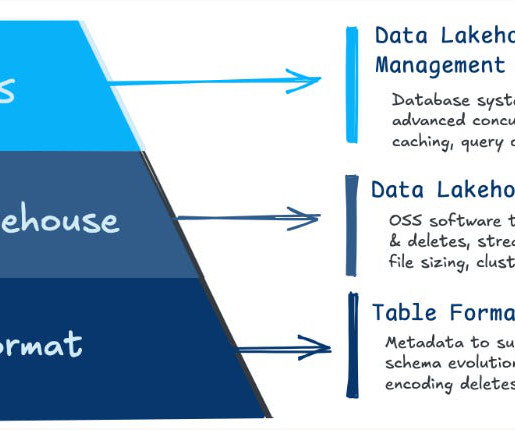

While Iceberg itself simplifies some aspects of data management, the surrounding ecosystem introduces new challenges: Small File Problem (Revisited): Like Hadoop, Iceberg can suffer from small file problems. Data ingestion tools often create numerous small files, which can degrade performance during query execution.
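To make the small-file problem concrete, the following is a minimal sketch of how a compaction planner might group many small files into fewer, larger rewrite groups. It uses a simple first-fit-decreasing bin-packing heuristic; the function name `plan_compaction`, the file names, and the sizes are hypothetical, and this is not how Iceberg or any specific engine implements compaction.

```python
from typing import Dict, List


def plan_compaction(file_sizes: Dict[str, int], target_bytes: int) -> List[List[str]]:
    """Group files so that each group's total size stays within target_bytes.

    First-fit decreasing: visit files from largest to smallest and place each
    one in the first group that still has room, opening a new group otherwise.
    A file larger than target_bytes ends up in a group of its own.
    """
    groups: List[List[str]] = []
    remaining: List[int] = []  # free capacity left in each group
    ordered = sorted(file_sizes.items(), key=lambda kv: kv[1], reverse=True)
    for name, size in ordered:
        for i, free in enumerate(remaining):
            if size <= free:
                groups[i].append(name)
                remaining[i] -= size
                break
        else:
            groups.append([name])
            remaining.append(max(target_bytes - size, 0))
    return groups


# Hypothetical file sizes (arbitrary units) written by an ingestion job.
sizes = {
    "a.parquet": 60,
    "b.parquet": 50,
    "c.parquet": 40,
    "d.parquet": 30,
    "e.parquet": 20,
}
groups = plan_compaction(sizes, target_bytes=100)
print(groups)
```

Each resulting group would then be rewritten as a single larger file, so query engines open fewer files per scan.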

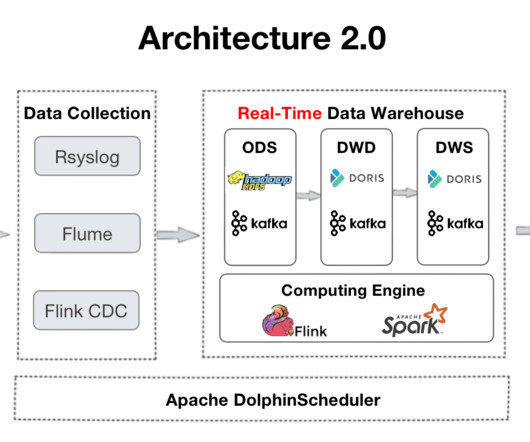

KDnuggets

SEPTEMBER 1, 2023

This article describes a large-scale data warehousing use case to provide reference for data engineers who are looking for log analytic solutions. It introduces the log processing architecture and real-case practice in data ingestion, storage, and queries.

Hevo

APRIL 19, 2024

A fundamental requirement for any data-driven organization is to have a streamlined data delivery mechanism. With organizations collecting data at a rate like never before, devising data pipelines for adequate flow of information for analytics and Machine Learning tasks becomes crucial for businesses.

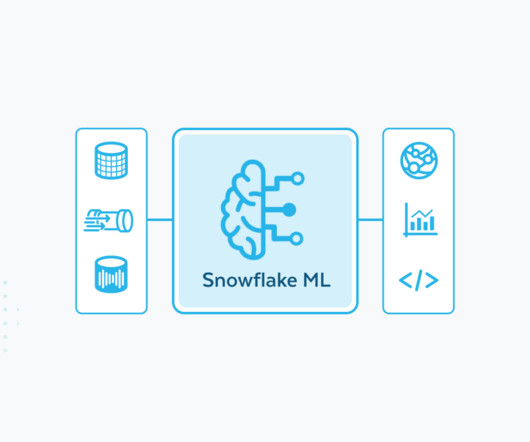

Snowflake

MARCH 31, 2025

A set of CPU- and GPU-specific images, pre-installed with the latest and most popular libraries and frameworks (PyTorch, XGBoost, LightGBM, scikit-learn and many more) supporting ML development, so data scientists can simply spin up a Snowflake Notebook and dive right into their work.

Hevo

JULY 17, 2024

Every data-centric organization uses a data lake, warehouse, or both data architectures to meet its data needs. Data Lakes bring flexibility and accessibility, whereas warehouses bring structure and performance to the data architecture.

Data Engineering Weekly

JANUARY 8, 2025

Apache Hudi's unique differentiators, such as its ability to handle complex data operations asynchronously, set it apart. For example, Hudi excels in scenarios requiring large-scale data ingestion with transactional guarantees, a feature critical for the finance, healthcare, and retail industries.

Edureka

APRIL 9, 2025

Programming Languages: Hands-on experience with SQL, Kusto Query Language (KQL), and Data Analysis Expressions (DAX). Data Ingestion and Management: Good practices for data ingestion and management within the Fabric environment. Proper preparation is crucial for success and career growth in cloud-based analytics.

Cloudyard

APRIL 22, 2025

Introduction: In modern data pipelines, especially in cloud data platforms like Snowflake, data ingestion from external systems such as AWS S3 is common.

Snowflake

APRIL 9, 2025

SoFlo Solar: SoFlo Solar's SolarSync platform uses real-time AI data analytics and ML to transform underperforming residential solar systems into high-uptime clean energy assets, providing homeowners with savings while creating a virtual power plant network that delivers measurable value to utilities and grid operators.

Team Data Science

JUNE 6, 2020

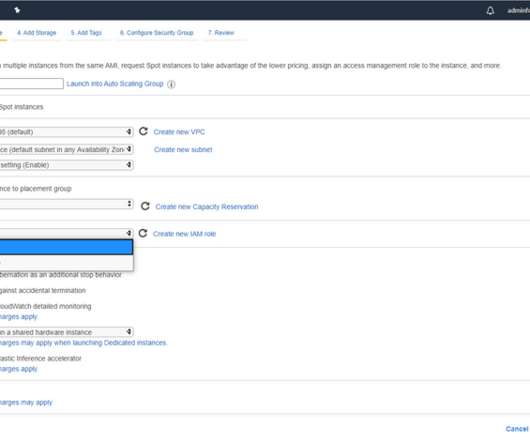

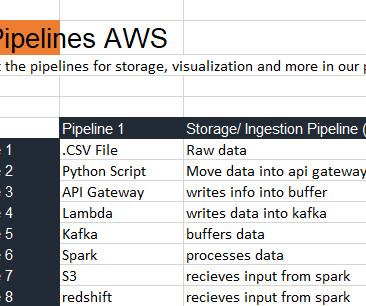

Welcome back to this Toronto-specific data engineering project. We left off last time concluding that finance has the largest demand for data engineers who have skills with AWS, and sketched out what our data ingestion pipeline will look like. I began building out the data ingestion pipeline by launching an EC2 instance.

Cloudyard

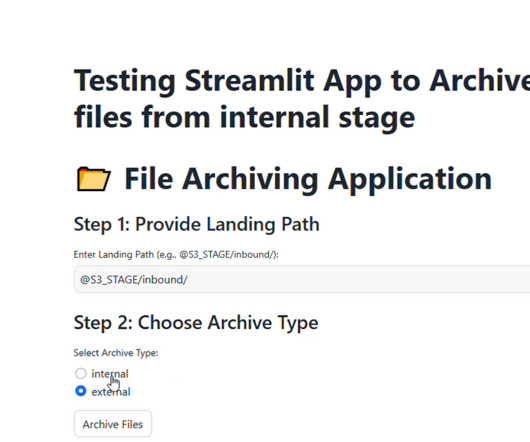

DECEMBER 18, 2024

Handling feed files in data pipelines is a critical task for many organizations. These files, often stored in stages such as Amazon S3 or Snowflake internal stages, are the backbone of data ingestion workflows. Without a proper archival strategy, these files can clutter staging areas, leading to operational challenges.

Team Data Science

MAY 10, 2020

I can now begin drafting my data ingestion/streaming pipeline without being overwhelmed. With careful consideration and learning about your market, the choices you need to make become narrower and clearer.

Pinterest Engineering

APRIL 7, 2025

During the instance migration, even though the measured network throughput was well below the baseline bandwidth, we still saw TCP retransmits spike during bulk data ingestion into EC2. In the database service, the application reads data (e.g.

KDnuggets

APRIL 29, 2022

Top-rated data science tracks consist of multiple project-based courses covering all aspects of data. It includes an introduction to Python/R, data ingestion & manipulation, data visualization, machine learning, and reporting.

Snowflake

OCTOBER 3, 2023

We are excited to announce the availability of data pipelines replication, which is now in public preview. In the event of an outage, this powerful new capability lets you easily replicate and failover your entire data ingestion and transformations pipelines in Snowflake with minimal downtime.

Snowflake

JUNE 4, 2024

Faster, easier ingest: To make data ingestion even more cost effective and effortless, Snowflake is announcing performance improvements of up to 25% for loading JSON files, and for loading Parquet files, up to 50%. Getting data ingested now only takes a few clicks, and the data is encrypted.

Data Engineering Podcast

NOVEMBER 20, 2022

In fact, while only 3.5% report having current investments in automation, 85% of data teams plan on investing in automation in the next 12 months. The Ascend Data Automation Cloud provides a unified platform for data ingestion, transformation, orchestration, and observability. That’s where our friends at Ascend.io

Striim

APRIL 18, 2025

Siloed storage: Critical business data is often locked away in disconnected databases, preventing a unified view. Delayed data ingestion: Batch processing delays insights, making real-time decision-making impossible.

KDnuggets

APRIL 13, 2022

Python Libraries Data Scientists Should Know in 2022; Naïve Bayes Algorithm: Everything You Need to Know; Data Ingestion with Pandas: A Beginner Tutorial; Data Science Interview Guide - Part 1: The Structure; 5 Ways to Expand Your Knowledge in Data Science Beyond Online Courses.
