What is Real-time Data Ingestion? Use cases, Tools, Infrastructure

Knowledge Hut

JULY 3, 2023

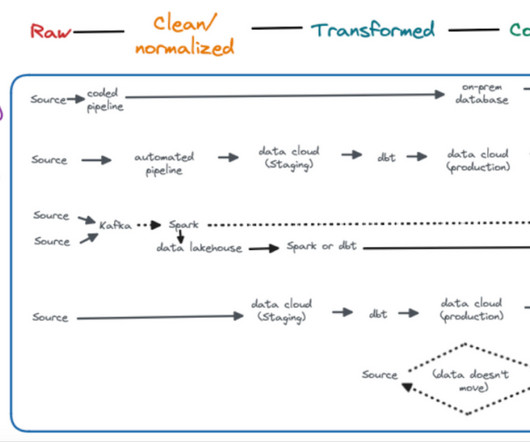

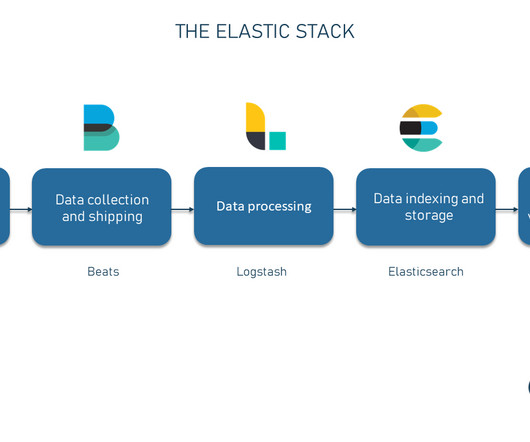

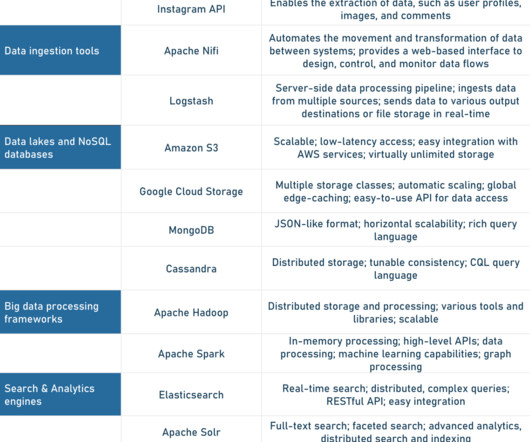

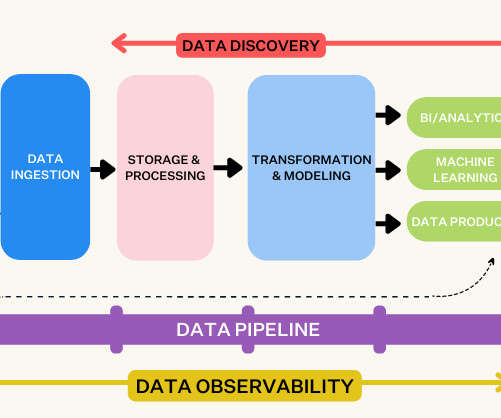

Conventional batch processing often falls short of the demands of today's fast-moving commercial environment. This is where real-time data ingestion comes into the picture. Data is collected from various sources, such as social media feeds, website interactions, and log files, and processed as it arrives.
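To make the contrast with batch processing concrete, here is a minimal sketch in Python. It is not taken from the article: the event shape, the `ingest` and `batch_count` functions, and the sample events are all hypothetical and exist only for illustration. The streaming version produces an updated result after every event, while the batch version produces one result only after all the data has been collected.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List

# Hypothetical event shape: a source name plus a raw payload.
Event = Dict[str, str]


def ingest(stream: Iterable[Event]) -> Iterator[Counter]:
    """Consume events one at a time and yield running per-source counts."""
    counts: Counter = Counter()
    for event in stream:
        counts[event["source"]] += 1  # update state as soon as the event arrives
        yield counts.copy()           # an up-to-date result exists after every event


def batch_count(events: List[Event]) -> Counter:
    """Batch-style equivalent: one result, available only once all data is in."""
    return Counter(event["source"] for event in events)


# Hypothetical sample events standing in for the sources named above.
SAMPLE_EVENTS: List[Event] = [
    {"source": "social_media", "payload": "new post"},
    {"source": "website", "payload": "page view"},
    {"source": "log_file", "payload": "GET /index.html 200"},
    {"source": "website", "payload": "click"},
]

if __name__ == "__main__":
    for snapshot in ingest(SAMPLE_EVENTS):
        print(dict(snapshot))
```

In a real deployment the `stream` argument would typically be fed by a message queue or log shipper rather than an in-memory list; the list is used here only so the sketch runs on its own.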
