The Race For Data Quality in a Medallion Architecture

DataKitchen

NOVEMBER 5, 2024

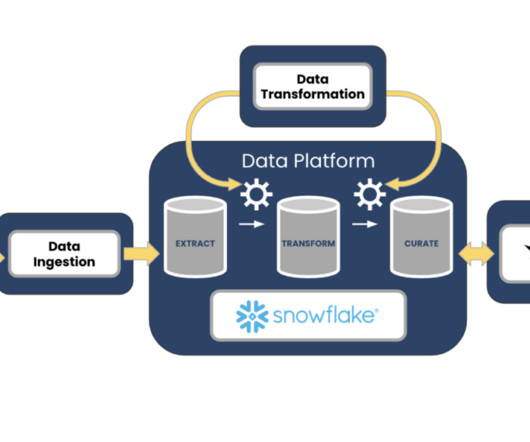

Bronze layers can also be the raw database tables. Next, data is processed in the Silver layer, where it undergoes “just enough” cleaning and transformation to provide a unified, enterprise-wide view of core business entities. For instance, suppose a new dataset from an IoT device is meant to be ingested daily into the Bronze layer.
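To make the Bronze-to-Silver step concrete, here is a minimal sketch of what "just enough" cleaning might look like for a daily IoT batch. Everything specific in it is an assumption for illustration, not something the article prescribes: the field names (`device_id`, `ts`, `temp_c`), the function name `to_silver`, and the rules themselves (parse types, keep only the expected day, drop exact duplicates).

```python
from datetime import date, datetime

def to_silver(bronze_rows, expected_day):
    """Apply light cleaning to raw Bronze rows (hypothetical schema).

    Returns (silver_rows, rejected_rows). Bronze input is left untouched.
    """
    silver, rejected = [], []
    seen = set()  # (device_id, timestamp) pairs already accepted
    for row in bronze_rows:
        try:
            device_id = row["device_id"].strip()
            ts = datetime.fromisoformat(row["ts"])
            temp_c = float(row["temp_c"])
        except (KeyError, TypeError, ValueError, AttributeError):
            # Missing or unparseable fields: keep the raw row for inspection.
            rejected.append(row)
            continue
        # The daily batch should only contain readings for the expected day.
        if not device_id or ts.date() != expected_day:
            rejected.append(row)
            continue
        key = (device_id, ts)
        if key in seen:
            continue  # exact duplicate reading; skip silently
        seen.add(key)
        silver.append({"device_id": device_id, "ts": ts, "temp_c": temp_c})
    return silver, rejected

# Illustrative data only; these values are made up for the example.
rows = [
    {"device_id": " dev-1 ", "ts": "2024-11-05T08:00:00", "temp_c": "21.5"},
    {"device_id": "dev-1", "ts": "2024-11-05T08:00:00", "temp_c": "21.5"},
    {"device_id": "dev-2", "ts": "bad", "temp_c": "20"},
    {"device_id": "dev-3", "ts": "2024-11-04T23:59:00", "temp_c": "19"},
]
silver, rejected = to_silver(rows, date(2024, 11, 5))
```

Returning the rejected rows alongside the cleaned ones, rather than discarding them, is a deliberate choice in this sketch: it lets a data quality check count and inspect failures before the Silver table is published.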
