Data Ingestion vs Data Integration: What Is the Right Approach for Your Business?

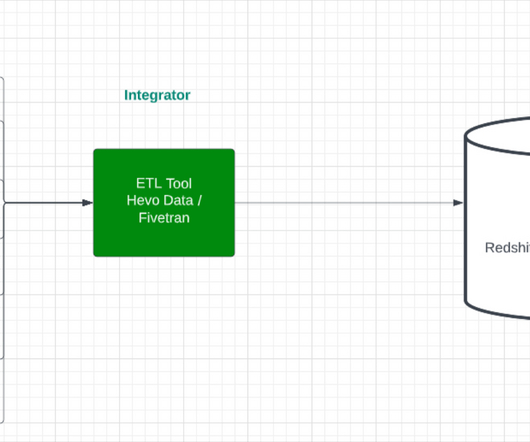

Hevo

FEBRUARY 23, 2025

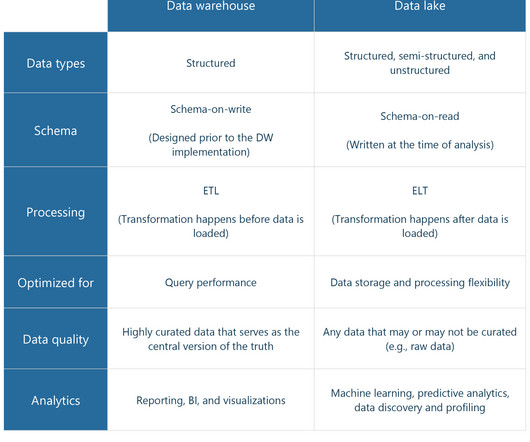

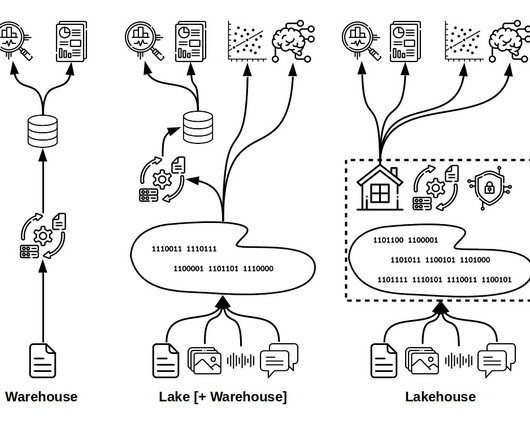

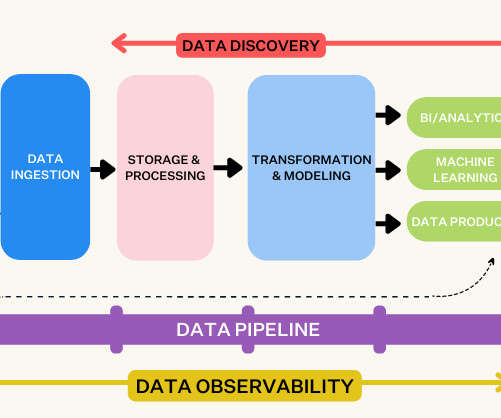

Organizations generate vast amounts of data every second, yet 80% of enterprise data remains unstructured and unused (Unstructured Data). To realize the full value of their data assets, they need both data ingestion and data integration.
