Ingest Data Faster, Easier and Cost-Effectively with New Connectors and Product Updates

Snowflake

JUNE 13, 2024

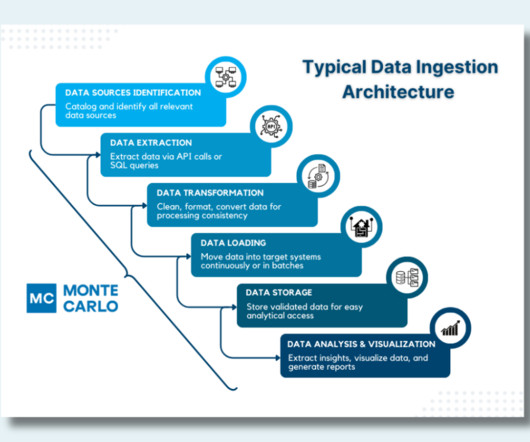

At Snowflake, we’re committed to making the first step the easiest, with seamless, cost-effective data ingestion that helps bring your workloads into the AI Data Cloud. Snowflake is launching native integrations with some of the most popular databases, including PostgreSQL and MySQL.
