How to Design a Modern, Robust Data Ingestion Architecture

Monte Carlo

MAY 28, 2024

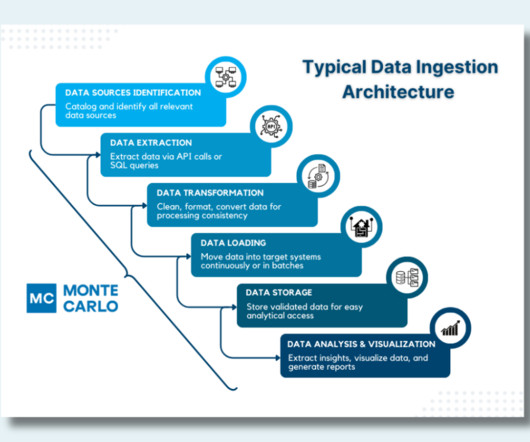

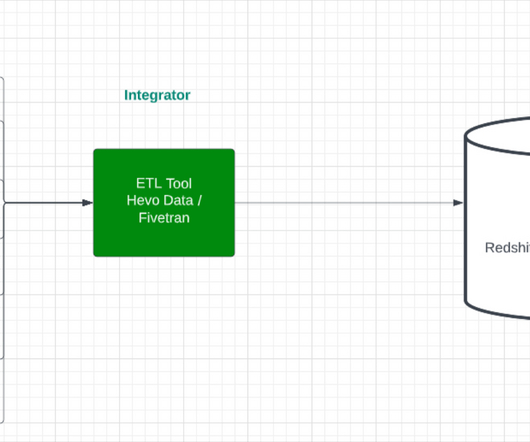

A data ingestion architecture is the technical blueprint that ensures every pulse of your organization’s data ecosystem brings critical information to where it’s needed most.

A typical data ingestion flow.

Popular Data Ingestion Tools

Choosing the right ingestion technology is key to a successful architecture.
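Whatever tool you pick, most ingestion flows share the same basic stages: extract records from a source, validate them, and load the good ones into a destination. The sketch below shows those stages in plain Python. It is not Monte Carlo's method or any particular tool's API. It assumes a CSV string as the source and an in-memory SQLite table as the destination. The `orders` schema, the sample data, and the function names (`extract`, `validate`, `load`, `run_pipeline`) are all hypothetical. Malformed records go to a dead-letter list instead of failing the whole batch.

```python
import csv
import io
import sqlite3
from typing import Iterable, Iterator

# Hypothetical sample data standing in for an upstream source (API, file drop, DB export).
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,
3,carol,42.50
"""

# Hypothetical schema: every record must have non-empty values for these fields.
REQUIRED_FIELDS = ("order_id", "customer", "amount")


def extract(raw: str) -> Iterator[dict]:
    """Extract: stream raw records from the source as dicts keyed by header."""
    yield from csv.DictReader(io.StringIO(raw))


def validate(records: Iterable[dict], dead_letter: list) -> Iterator[dict]:
    """Validate: yield typed, well-formed records; divert bad ones to dead_letter."""
    for rec in records:
        try:
            if not all(rec.get(field) for field in REQUIRED_FIELDS):
                raise ValueError("missing required field")
            clean = {
                "order_id": int(rec["order_id"]),
                "customer": rec["customer"],
                "amount": float(rec["amount"]),
            }
        except ValueError:
            dead_letter.append(rec)
            continue
        yield clean


def load(records: Iterable[dict], conn: sqlite3.Connection) -> int:
    """Load: upsert validated records into the destination; return rows written."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    rows = [(r["order_id"], r["customer"], r["amount"]) for r in records]
    # INSERT OR REPLACE keyed on order_id makes re-running the same batch idempotent.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def run_pipeline(raw: str, conn: sqlite3.Connection) -> tuple[int, list]:
    """Wire the stages together; return (rows loaded, rejected records)."""
    dead_letter: list = []
    loaded = load(validate(extract(raw), dead_letter), conn)
    return loaded, dead_letter


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    loaded, rejected = run_pipeline(RAW_CSV, conn)
    print(loaded, len(rejected))  # → 2 1
```

The stages are generators, so records stream through one at a time rather than being materialized between steps. The upsert on a primary key is one simple way to make retries safe. A production architecture would swap these pieces for real connectors, schema checks, and monitoring.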
