The Race For Data Quality in a Medallion Architecture

DataKitchen

NOVEMBER 5, 2024

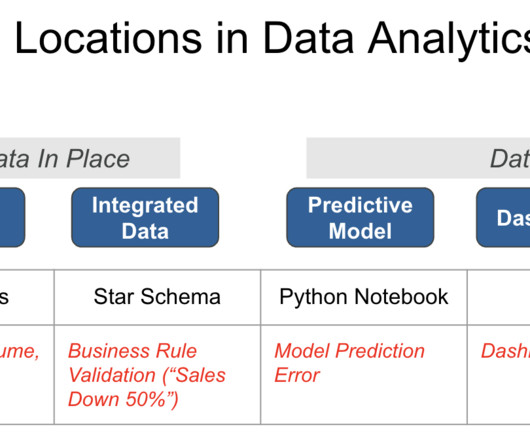

The Medallion architecture pattern is gaining traction among data teams. It is a layered approach to managing and transforming data: rather than serving raw data directly, it moves data step by step through successive layers, and each layer refines the output of the one before it. Moving data systematically through these layers incrementally improves the structure of data in a data lakehouse environment.
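The paragraph above describes the layering only in general terms. The sketch below illustrates the idea using the bronze, silver, and gold layer names commonly associated with the Medallion pattern. Several details are assumptions made for illustration: the functions `to_bronze`, `to_silver`, and `to_gold`, the sample order records, and the specific quality checks. Plain Python lists stand in for lakehouse tables.

```python
# Hypothetical raw order records as they might land in the first (bronze) layer.
RAW = [
    {"order_id": "1", "amount": "19.99", "country": "us"},
    {"order_id": "2", "amount": "not-a-number", "country": "DE"},
    {"order_id": "3", "amount": "5.00", "country": "de"},
    {"order_id": "1", "amount": "19.99", "country": "us"},  # duplicate of order 1
]


def to_bronze(raw):
    """Bronze (hypothetical): keep records as ingested, tagging each with its row index."""
    return [dict(rec, _row=i) for i, rec in enumerate(raw)]


def to_silver(bronze):
    """Silver (hypothetical): cleanse and conform records, setting aside rows
    that fail basic quality checks (unparseable amount, duplicate order_id)."""
    clean, rejected, seen = [], [], set()
    for rec in bronze:
        try:
            amount = float(rec["amount"])
        except ValueError:
            rejected.append(rec)  # amount is not a valid number
            continue
        if rec["order_id"] in seen:
            rejected.append(rec)  # duplicate order
            continue
        seen.add(rec["order_id"])
        clean.append({
            "order_id": rec["order_id"],
            "amount": amount,
            "country": rec["country"].upper(),  # normalize country codes
        })
    return clean, rejected


def to_gold(silver):
    """Gold (hypothetical): aggregate into a business-ready view, revenue per country."""
    totals = {}
    for rec in silver:
        totals[rec["country"]] = round(totals.get(rec["country"], 0.0) + rec["amount"], 2)
    return totals


bronze = to_bronze(RAW)
silver, rejected = to_silver(bronze)
gold = to_gold(silver)
print(gold)           # {'US': 19.99, 'DE': 5.0}
print(len(rejected))  # 2
```

Each layer reads only from the one before it. A data quality problem, such as the unparseable amount or the duplicate order here, is therefore caught and set aside at a defined point rather than surfacing later in the aggregated gold view.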
