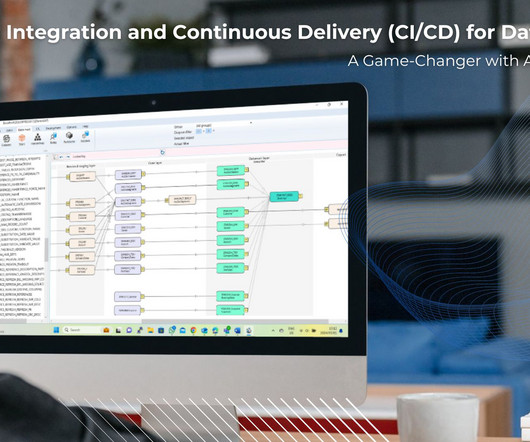

Using Trino And Iceberg As The Foundation Of Your Data Lakehouse

Data Engineering Podcast

FEBRUARY 18, 2024

Summary: A data lakehouse is intended to combine the benefits of data lakes (cost-effective, scalable storage and compute) and data warehouses (user-friendly SQL interface).

To start, can you share your definition of what constitutes a "Data Lakehouse"?

Closing Announcements: Thank you for listening!
